Top-Down & Bottom-Up Processing

-Abhishek Malik

Y6020

In this essay I give a brief introduction to information processing and then focus on Top-Down and Bottom-Up processing, specifically in vision. I start with a brief historical context and my understanding of information processing. I then discuss Top-Down and Bottom-Up processing and how their interaction gives rise to our initial interpretation of the world around us, with a brief overview of feature detectors along the way. I also attempt to explain why we see certain illusions and which processes in our system lead us to interpret them wrongly.

George A. Miller presented two theoretical ideas that have become fundamental to information processing and cognitive psychology in his paper The Magical Number Seven, Plus or Minus Two, published in 1956 in Psychological Review and one of the most highly cited papers in psychology. He also led the development of WordNet, a linguistic knowledge base that maps the way the mind stores and uses language. The first of the two ideas was chunking. He suggested that working memory capacity is limited, and that elements such as letters, numbers or words can be held only as about seven, plus or minus two, chunks at a time. Later research has shown that the span depends on the type of element being chunked: for example, it is about seven for digits, six for letters and only five for words. The second important idea that came out of his paper was that the human mind can be modeled on a computer when describing learning. Like a computer, the human mind takes in information, processes or encodes it, organizes it in memory, and retrieves it when needed.
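The effect of chunking on the number of units to be held can be sketched in a few lines of Python. This is only a toy illustration: the `chunk` helper, the digit string and the chunk size of four are assumptions chosen for the example, not something taken from Miller's paper.

```python
def chunk(items, size):
    """Split a sequence into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]

# Hypothetical input: twelve separate digits, more than a span of about seven.
digits = "149217761945"

# Grouped into four-digit units, the same string becomes three chunks.
chunks = chunk(digits, 4)

print(len(digits), "single digits ->", len(chunks), "chunks:", chunks)
```

Held as single digits, the string exceeds the span described above; held as three familiar four-digit groups, it fits comfortably.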

Illustration 1: Stage theory model showing that information is stored and processed in three stages.

In 1968, Atkinson and Shiffrin proposed the stage theory model, which describes three stages of storing and processing information, as shown in illustration 1. Sensory memory holds information for up to ½ second, while short-term memory is said to hold it for 15 to 20 seconds. Information that reaches long-term memory is stored permanently.
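The three stages can be written down as a very small toy rule. The two time limits come from the paragraph above; the `attended` and `rehearsed` flags, used here as the conditions for moving an item to the next store, are simplifying assumptions of this sketch, as is the function name `store_after`.

```python
SENSORY_LIMIT_S = 0.5       # sensory memory: up to about half a second
SHORT_TERM_LIMIT_S = 20.0   # short-term memory: upper end of the 15-20 s range

def store_after(elapsed_s, attended=False, rehearsed=False):
    """Return the store still holding an item after elapsed_s seconds, or None if lost.

    Assumption of this sketch: attention moves an item from sensory to
    short-term memory, and rehearsal moves it on to long-term memory.
    """
    if attended and rehearsed:
        return "long-term"          # treated as permanent
    if attended and elapsed_s <= SHORT_TERM_LIMIT_S:
        return "short-term"
    if not attended and elapsed_s <= SENSORY_LIMIT_S:
        return "sensory"
    return None                     # the item has decayed

print(store_after(0.3))                                   # unattended, very recent
print(store_after(10.0, attended=True))                   # attended, not rehearsed
print(store_after(30.0, attended=True))                   # attended, too long ago
print(store_after(30.0, attended=True, rehearsed=True))   # rehearsed
```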

In addition to the stage theory model of information processing, three more models are widely accepted. The first is based on the work of Craik and Lockhart (1972) and is called the "levels-of-processing" theory. It proposes that information is processed at different levels of elaboration along a continuum from perception, through attention, to labeling and, finally, meaning. Two other models have been proposed as alternatives to the Atkinson-Shiffrin model: parallel distributed processing and connectionist. The parallel distributed processing model hypothesizes that information is processed in different regions of the brain simultaneously, as opposed to the sequential order of the Atkinson-Shiffrin model. The connectionist model proposed by Rumelhart and McClelland (1986) is an extension of the parallel distributed processing model.

With the historical context in place, we come to the focus of this essay. In terms of sequential processing models, and with reference to the illustration above, it is the initial information processing that takes place during the transfer from sensory memory to working (short-term) memory, i.e. just before we arrive at the first interpretation of our sensory input. In terms of parallel processing models, it is the first meaningful result we obtain from the sensory input once the biases from the various connections have been applied.

First, the sensory input is interpreted by feature detectors, which respond to very specific elementary aspects of the stimulus. It is worth looking at feature detectors briefly at this point. Evidence for their existence comes from recordings of single neurons in the visual pathways. It was found that the responses of many types of neurons do not correlate with straightforward physical properties of the stimulus. Instead, specific patterns of excitation were required, often spatio-temporal patterns involving movement. Feature detectors and feature detection also have an important and more general role in perception and classification. Their significance can be better understood from the early attempts of computer scientists to recognize alphanumeric characters. Initially they tried matching input against templates of the specific characters, but this performed badly. Detecting features such as specific curves, lines, diagonals and their relative positions, and then classifying according to those features, gave much better results. The feature detection approach can be said to be more efficient because it is more abstract, as shown in this example by font-invariant character recognition. Another question, which is also attracting interest in this area, is why we have feature detectors for some specific features and not others. One view currently receiving some support is that this may be explained by sparse coding: at any one time only a small fraction of all units is active, and yet in combination they provide a sufficient representation of the scene.
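The contrast between template matching and feature-based classification can be illustrated with a deliberately tiny Python sketch. Everything in it is an assumption made for the example: the 5x5 glyphs, the two letters, and the two hand-picked features (where the horizontal bar sits and where the vertical stem sits within the glyph). It is not a reconstruction of any historical recognition system.

```python
# Stored 5x5 templates for two letters ('#' is ink, '.' is background).
TEMPLATES = {
    "T": ["#####", "..#..", "..#..", "..#..", "..#.."],
    "L": ["#....", "#....", "#....", "#....", "#####"],
}

def template_score(glyph, template):
    """Count the cells where the glyph and the template agree."""
    return sum(g == t for g_row, t_row in zip(glyph, template)
               for g, t in zip(g_row, t_row))

def classify_by_template(glyph):
    """Pick the letter whose template agrees with the glyph in the most cells."""
    return max(TEMPLATES, key=lambda name: template_score(glyph, TEMPLATES[name]))

def features(glyph):
    """Return (bar_on_top, stem_on_left), measured relative to the ink itself."""
    ink = [(r, c) for r, row in enumerate(glyph)
           for c, ch in enumerate(row) if ch == "#"]
    rows = [r for r, _ in ink]
    cols = [c for _, c in ink]
    bar_row = max(set(rows), key=rows.count)    # row with the most ink
    stem_col = max(set(cols), key=cols.count)   # column with the most ink
    return (bar_row == min(rows), stem_col == min(cols))

FEATURE_TABLE = {(True, False): "T", (False, True): "L"}

def classify_by_features(glyph):
    """Look up the letter from its feature tuple, or '?' if unknown."""
    return FEATURE_TABLE.get(features(glyph), "?")

# A smaller "L" drawn one column to the right and one row higher.
shifted_l = [".#...", ".#...", ".#...", ".###.", "....."]

print("template matching:", classify_by_template(shifted_l))
print("feature detection:", classify_by_features(shifted_l))
```

In this toy case the shifted glyph agrees with the "T" template in more cells than with the "L" template, so template matching picks the wrong letter, while the two features are unaffected by the shift and change of size.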

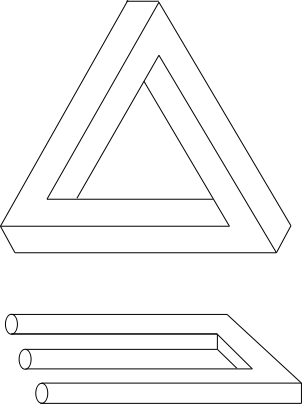

All of this is part of Bottom-Up processing. We now turn to Top-Down processing, and studying the two in contrast should lead to a better understanding of both. Before proceeding, a few things need to be cleared up. Our perception can be said to be of the immediate present: what is happening around us, as conveyed by the pattern of light falling on our retina. The problem is that each 2-D retinal image could be a representation of many 3-D scenes. Since we rapidly perceive only one interpretation, we must be seeing more than just the immediate information on the retina. These highly accurate guesses and interpretations can be explained by their dependence on a rich store of knowledge and past experience. These are the Top-Down influences: expectations built from past knowledge and experience that affect how sensory information is interpreted. It is because of these influences that we at times see things differently from what they are, as in the case of illusions, which most of us must have experienced. As examples, one can look at this video of an illusion with face drawings: when the drawings are turned, the face seen earlier is no longer easy to see and another face comes up naturally. The rotating hollow mask illusion creates the perception of a face on both sides even though one side is hollow. Illustration 2 below shows two famous undecidable figures, the Penrose triangle and the devil's tuning fork. Here we try to make sense of the figures because of our expectations. This should motivate us to explore Top-Down processing further, which is what we do next, along with contrasting it with Bottom-Up processing.

Illustration 2: Penrose Triangle and Devil's Tuning Fork

I believe that Top-Down and Bottom-Up processing both take place simultaneously and, since they proceed through the hierarchy in opposite directions, should meet somewhere in between. We will come back to this, but let us first try to define both processes. Bottom-Up processing can be said to be a data-driven process, as its goal is to reflect the outside world accurately. The interpretation is determined mostly by information gained from the senses. Top-Down processing, on the other hand, can be said to be a schema-driven process (schema: patterns or concepts formed from earlier experiences). Here the interpretation is influenced by past knowledge and experience. As an example of Bottom-Up processing, consider reading, where we first process and identify letters, then words, then phrases and then sentences, in hierarchical order from the bottom towards the top. As an example of Top-Down processing, consider how, once we are given a word, we are able to guess the next words or sentences from context, under the influence of our past knowledge.

Now consider the interaction between these processes, taking vision as an instance. If processing is divided into three levels, then the lower level would only analyze the image and detect features. Mid-level processing would take into account the different appearances of an object: different orientations, various possible illuminations and partial occlusion. It is at the high level that we would have object recognition and understand the complete scene, with the various context effects guided by previous experience. As for the interaction, it looks as though, after the initial processing at the lower level, the information gained is passed to both the mid level and the high level, and thus Top-Down and Bottom-Up processing take place simultaneously. However, judging by the mistakes we so often make, or by the illusions given above, Top-Down processing seems to dominate the final interpretation that comes out of these interactions.

In explaining the illusions above, we can point out that the interpretation we see is clearly influenced by the results of Top-Down processing and by the biases formed through repeated exposure to the features involved. Take the case of the face that emerges even from the hollow side of the rotating mask. When we see facial features such as a nose in the image of the hollow side, then as soon as information about these features reaches the high level after lower-level analysis, the interpretation of a raised face dominates all other interpretations, because we have always been exposed to such faces in life and never see a sunken nose. So it is difficult to overcome this bias or expectation, and as a result we see a normal raised face instead of the hollow side of the mask.
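One way to make this argument concrete is to treat the expectation as a prior and the sensory evidence as a likelihood, and to combine the two with Bayes' rule. This framing is an assumption of the sketch rather than something stated in the sources, and all the numbers below are invented purely for illustration.

```python
def posterior(prior, likelihood):
    """Combine a prior and a likelihood over the same hypotheses (Bayes' rule)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}

# Top-Down expectation (hypothetical numbers): almost every face ever seen was raised.
prior = {"raised face": 0.99, "hollow face": 0.01}

# Bottom-Up evidence (hypothetical numbers): the image cues favor the hollow reading.
likelihood = {"raised face": 0.2, "hollow face": 0.8}

belief = posterior(prior, likelihood)
for hypothesis, p in belief.items():
    print(f"{hypothesis}: {p:.3f}")
```

Even though the made-up evidence favors the hollow reading four to one, the strong expectation leaves the raised-face interpretation far more probable, which mirrors the dominance of Top-Down processing described above.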

I would like to conclude with the idea that attention can be explained on the basis of these processes. We attend either to something that is new or odd, or to something that follows a known pattern and is in a way expected. Both cases can be explained as follows. New things that are not expected to be there are interpreted by the Bottom-Up process without influence from the Top-Down process, so they enter our first interpretation of the world from sensory input and we take notice of them. The expected things that we want to attend to can be explained similarly by Top-Down processing, which influences the interpretation of sensory input so that we perceive the expected things we intended to attend to.

References:

[1] George A. Miller, Wikipedia.

[3] Theories Used in IS (Information Systems) Research Wiki.

[4] Keith J. Holyoak, Psychology, MITECS.

[5] Horace Barlow, Feature Detectors, MITECS.

[6] Patrick Cavanagh, Top-Down Processing in Vision, MITECS.

[7] Bottom-Up Processing, everything2.com.