What is Information?

The outcome of an unpredictable experiment.Ubiquitous examples

- Tossing a coin

- A Google request

- Code cracking

- Assembling a DNA sequence from a new species

- Collecting data from new horizons

Entropy

The less predictable (the more random) an experiment outcome is, the more informative it is. Information randomness is measured by entropy, a notion Shannon borrowed from physicists. Moreover, information can be compressed, say for storage, and Shannon proved that the limit to compression is entropy. Information can also be transmitted, say wirelessly, and Shannon proved that the limit to transmission is capacity.How do we collect information?

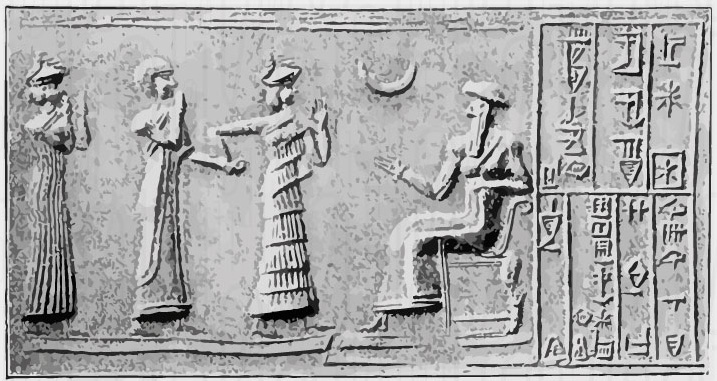

4000 BC - The first census

One ancient example of information gathering is the first census dating back to the Babylonians in 4000 BC. They used a census as an essential guide to know how much food they needed to find for each member of the population.1882 - Pre-Digital Era

- Prone to human errors when gathering, copying or analyzing the data

- Merging data from several distant sources incurred significant delay due to transport of paper notes or analog telecommunications

- Analyzing lots of data was a complex and long process

1997 – Today

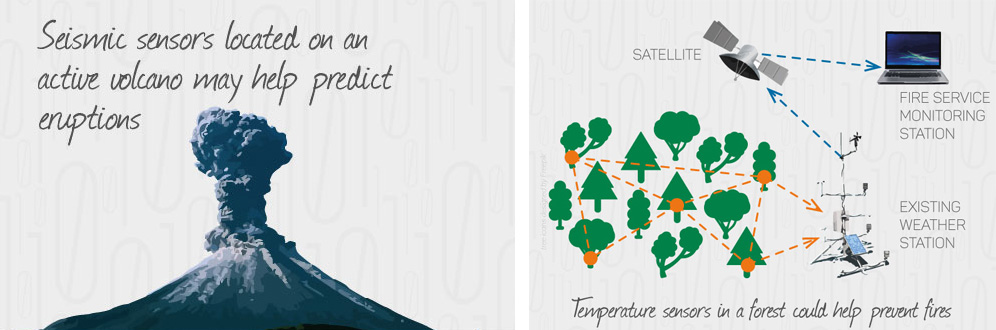

Wireless sensor networks

- Nowadays, we can automate the process of collecting information with electronic devices.

- A wireless sensor network, for example, is comprised of multiple devices that send measurements to a central control unit.

NASA - Radar waves

In its next mission, the Mars Reconnaissance Orbiter will seek liquid or frozen water in the first few hundreds of feet of Mars' crust. It will probe the subsurface using radar waves using a 15-25 megahertz frequency band in order to get the desired high-depth resolution.Internet

Antenna arrays

Smart meters

A smart meter is an electronic device that records electricity consumption in time and sends this information back to the utility provider for monitoring and billing.2016 - What is next?

- Use of sensors for monitoring different aspects of human life

- Smart houses with sensors in every appliance

- Body wireless sensor networks for healthcare monitoring

Big Data

How will we store the amounts of information we collect in the future?Today, within the last 200 seconds this is what happened on social media:

How do we compress information?

Data compression

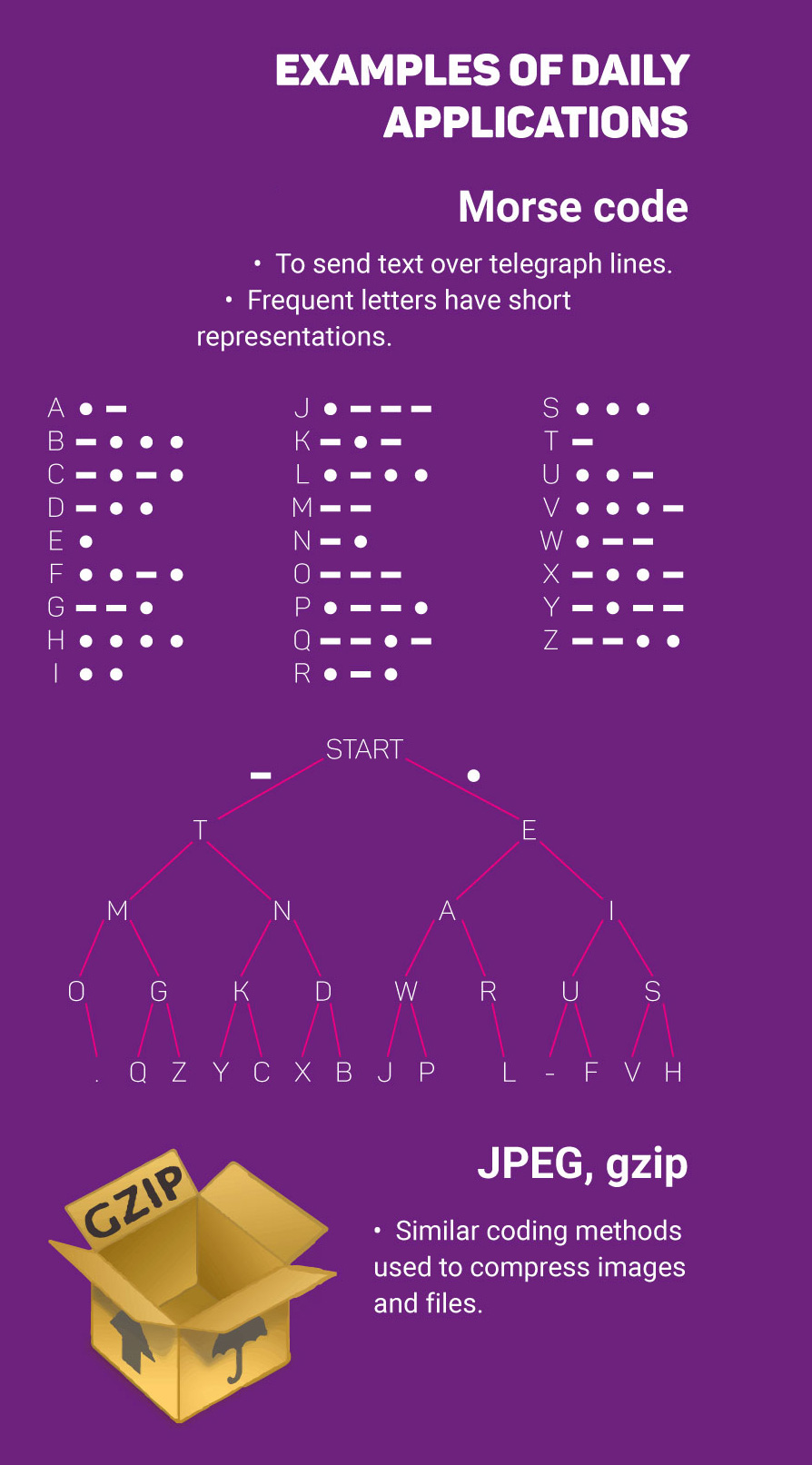

Morse code, invented in 1838, is an early example of data compression based on using shorter codewords for letters such as "e" and "t" that are more common in English. Modern work on data compression began in the late 1940s with the development of information theory.In 1949, Claude Shannon and Robert Fano devised a systematic way to assign codewords based on probabilities of blocks. An optimal method for doing this was then found by David Huffman in 1951. Early implementations were typically done in hardware, with specific choices of codewords being made as compromises between compression and error correction.

Why compress?

Compression enables you to optimize storage and communication such that you reduce the cost per item you store and/or share.If you take a photograph with your camera and save it in both bmp (uncompressed) and jpg (compressed - lossy), you will get a file size ratio of 16:1. This means that you can put 16 times more pictures on your memory card if you select the compressed format (jpg). You will also be able to add 16 times more pictures on your dropbox, and upload them 16 times faster on Facebook.

Future challenges

How to optimally compress data allowing some levels of distortion, when we do not make statistical assumptions on the data and with low complexity? (universal data compression with distortion).Compression for new applications.

How do we store information?

Current Storage Techniques

Hard-disk drive:

The hard-disk drive is a magnetic storage. Information is stored in the 2 possible orientations of a magnetic dipole.

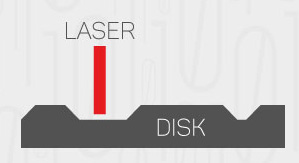

CD/DVD:

The CD/DVD is an optical disc. Information is stored as pits and lands on a reflective layer.BLU-RAY:

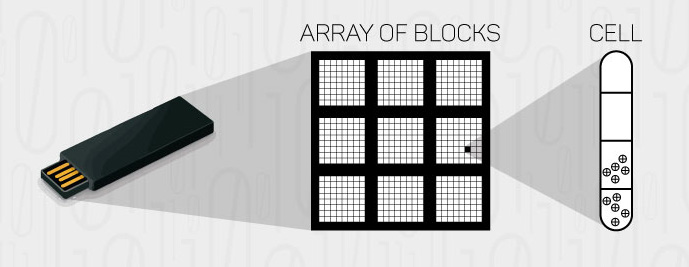

Blu-ray technology has a... blue laser. Blue light has a shorter wavelength than red, thus more information can be stocked on the same disc size.Flash SSD and RAM:

Each cell in flash memory stores information in terms of the amount of charge held. Charge cannot be reduced from an individual cell, an entire block has to be erased.

Some key enabling technologies

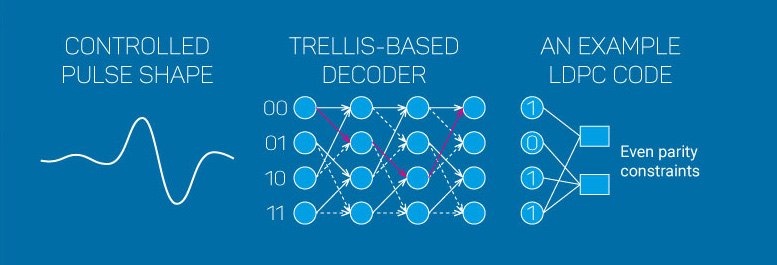

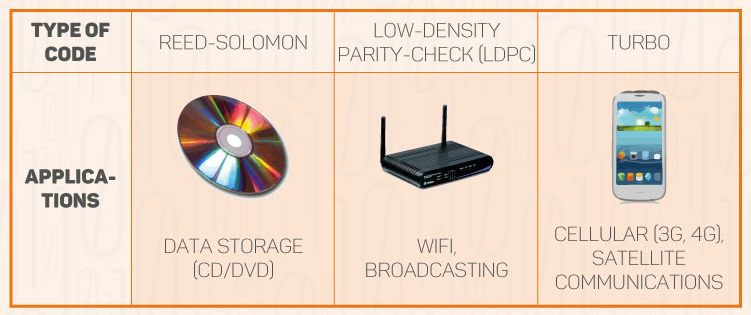

Error-Correcting Codes work by adding redundancy in the form of parity symbols and can range from simple replication to the more sophisticated Reed-Solomon and LDPC codes.

Storage technologies on the horizon

- DNA Storage: A single gram of DNA can store 455 exabytes of data for centuries.

- Phase Change Memory (PCM): Heating chalcogenide shifts it between a low and high resistance state.

- Memristors: Change in resistance of devices depends upon charge supplied.

- Holographic Storage: Storing data throughout the 3D volume of the storage medium.

New technologies - fresh challenges!

Two-dimensional Coding for magnetic and optical disks.- Novel techniques to handle correlated errors.

Flash Memory: Overcoming the charge leakage problem.

- Rank modulation codes store data in terms of the relative charge levels in different cells.

Data Centers: Efficient recovery from node failure.

- Regenerating codes minimize data download; Codes with Locality minimize number of helper nodes.

How do we protect information?

Redundancy in language

I "know" is mistyped in the same sentence as "snow", it is still likely that this error will be detected and corrected from the context, although "snow" is a valid word. Detection and correction may be harder or impossible if "know" is mistyped as "knew". As these examples show, redundancy is the key to recovery from errors.

Repetition coding

Redundancy through repetition coding was used since ancient times, mostly for strategic purposes. It is the simplest method of inserting redundancy into a message: repeat it. For example, in a triple repetition code for sending binary data, 0 and 1 are "encoded" as 000 and 111 respectively. A data block such as 010 is encoded into the code block 000 111 000. If the code block is corrupted by noise and received as 001 100 1111, we can detect that there are errors in the first two symbols. The first error can be corrected by "majority voting", the second word is "misdecoded" as 0, and the error in the third word goes undetected.Shannon capacity

Communication engineers have developed a variety of codes, such as LDPC and turbo codes, that achieve the efficiency limits set by Shannon theory. However, the field of error-correcting codes is still a vibrant research area, and is likely to remain so for many decades spurred by emerging applications.

Parity-chech codes

In a parity-chech code, each redundant bit is computed as a modulo-2 sum of some subset of data bits. The (7,4) Hamming code is an example of a parity-check code. Most codes used in today's communication systems are parity-check codes.State-of-the-art in coding

Today, error-correcting codes are used everywhere from wireless communications to mass storage devices to fiber-optics links. Some major classes of error-correcting codes and their application areas are given in the table below.

Future challenges

- How to know the capacity of even small networks

- Need to design codes for new applications

- How to jointly design how to process and encode information over networks

How do we secure information?

50 BC - Ancient Cryptography

Caesar Cipher:Introduced in 50 B.C. by Julius Caesar to protect messages of military significance; also used in 1915 by the Russian army. Each letter in the plain text is replaced by a letter some fixed number of positions down the alphabet

Very simple to implement, but easy to break

1882 - Computer era

One-Time Pad:Introduced in 1882 by F. Miller to secure the transmission of telegraphs; also used in World War II. Symmetric key encryption: the encryption and decryption keys are the same.

1997 - Modern cryptography

RSA (Rivest-Shamir-Adleman):Introduced in 1997 by Rivest-Shamir-Adleman for secure data transmission; widely employed nowadays.

Public key encryption: the encryption key is public to anyone, but only the receiving party has access to the decryption key. Uses short keys. Not proven to be unbreakable.

2016 - New opportunities in the horizon

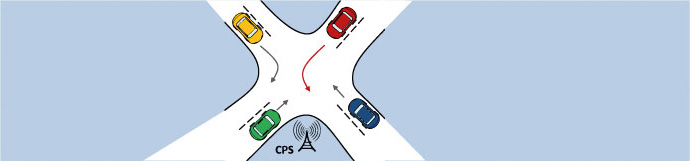

Exploit wireless medium properties to generate secret keys:

Challenges: Attractive targets to hackers.

Exploit properties of physical systems to design secure protocols for cyber-physical systems:

Challenges: Interoperation among heterogenous applications.

Exploit quantum mechanical properites to generate secret keys:

Perfectly secure: Heisenberg's uncertainty principle

Challenges: Transmission distance and encryption rate limitations

Quantum information

Introduction

Qubit:

1 Qubit = Unit of quantum information stored in a single photon, electron, ion,...

One day we might use Qubits to communicate and compute in ways that defy our fantasy.

Measurements:

Measurements randomly project Qubits.

When observing from a certain point of view, two measurements can occur with random probabilities.

Entanglement:

Pairs can be entangled

Quantum entanglement is a physical phenomenon that occurs when pairs or groups of particles are generated or interact in ways

such that the quantum state of each particle cannot be described independently - instead,

a quantum state may be given for the system as a whole.

Near future

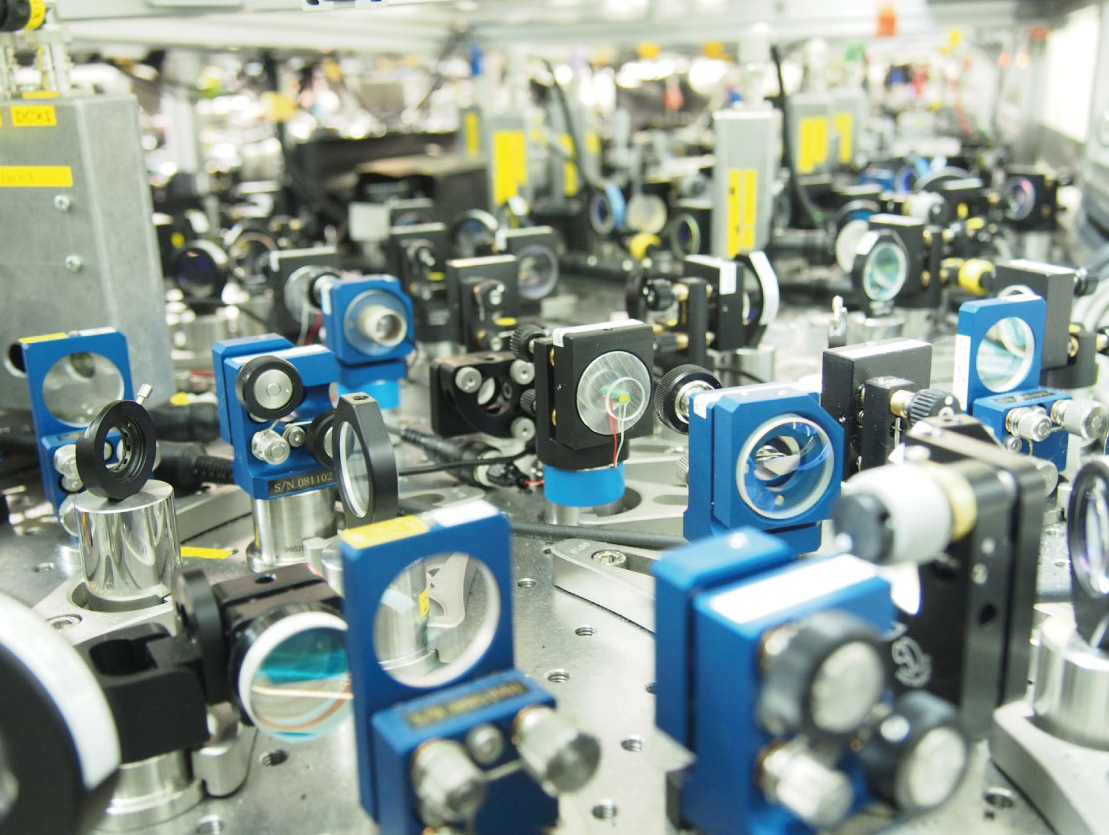

Today photonic Qubits are teleported on 10-100 km and used in quantum crypto. Quantum networks are envisioned.Quantum networks form an important element of quantum computing and quantum cryptography systems. Quantum networks allow for the transportation of quantum information between physically separate quantum systems. Secure communication can be implemented using quantum networks through quantum key distribution algorithms.

Optical quantum networks using fiber optic links or free-space links play an important role transmitting quantum states in the form of photons across large distances. Optical cavities can be used to trap single atoms and can serve as storage and processing nodes in these networks.

Energy harvesting

Wireless devices equipped with energy harvesters enable communicating continuously in a sustainable way

Key components of an energy harvesting system

An energy harvesting system generally requires an energy source such as vibration, heat, light or air flow and three other key electronic components, including:- An energy conversion device that can translate the energy into electrical form

- An energy harvesting module that captures, stores and manages power for the device

- An end application that for example can enable a wireless sensor network, or control and monitor devices

Common sources of energy harvesting

- Mechanical energy - from sources such as vibration, mechanical stress and strain

- Thermal energy - waste energy from furnaces, heaters, and friction sources

- Light energy - captured from sunlight or room light via photo sensors, photo diodes, or solar panels

- Electromagnetic energy - from inductors, coils and transformers

- Natural energy - from the environment such as wind, water flow, ocean currents, and solar

- Human body - a combination of mechanical and thermal energy naturally generated from bio-organisms or through actions such as walking and sitting

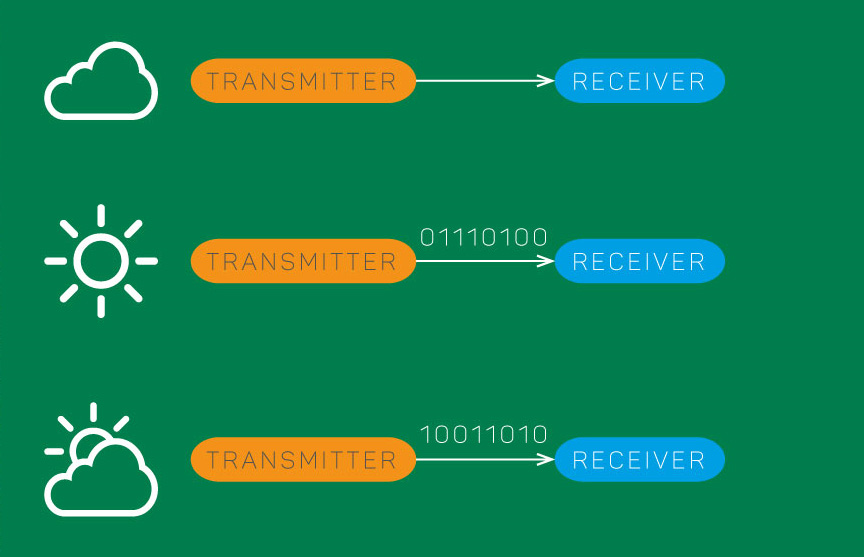

Communication rate

The transmitter can send data by storing harvested energy and using it efficiently to maximize reliable communication rate.

Possible application

in near future...- Space exploration powered by solar radiation

- Wireless sensor networks deployed in rivers to predict floods

- Wireless sensor networks for monitoring animal behaviour

- RFID tag inventory systems

- Solar powered mobile phone

- Intravascular sensors and robotic nano devices

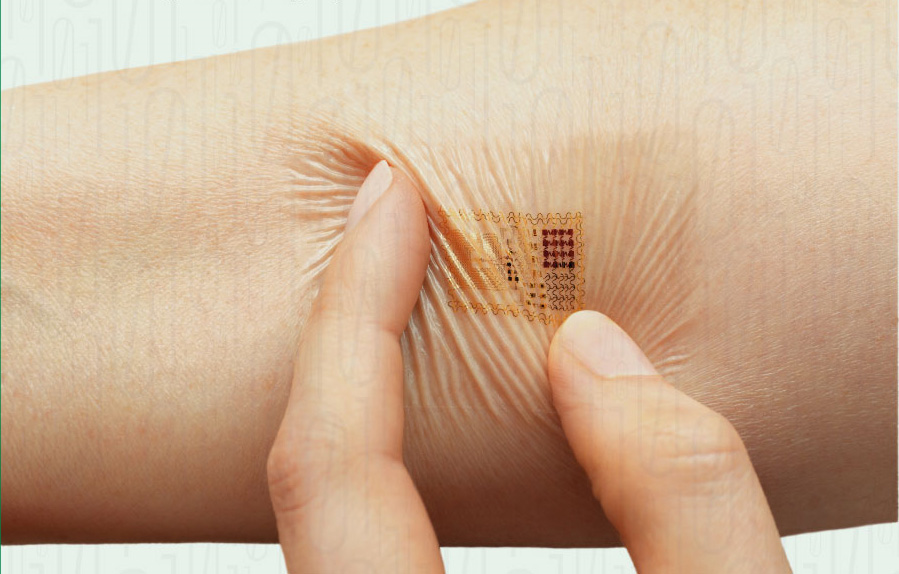

- Biostamps for medical monitoring

- RFID tag inventory systems

Challenges

- Limited energy storage capacity per device

- Limited energy harvesting efficiency

- Imperfections in energy storage, e.g. leakage, capacity loss, etc.

- Accuracy of the prediction of the harvested energy

- Adapting to unexpected changes in the available energy

The content and images of the page was distributed by IEEE Information Theory Society.