Articulated Hand Posture Recognition System

Guides : Prof. Amitabh Mukherjee & Dr. Prithwijit Guha

Sourav Khandelwal

Description :

Manipulating a robotic hand to carry out different tasks requires the robot system to learn hand manipulation skills by observing a human demonstrator. The paper describes a vision-based articulated hand posture recognition system that serves as an interface to such a robotic system. It uses the Inner-Distance Shape Context (IDSC) as the hand shape representation, to capture the various postures the hand can take. IDSC is a variant of the shape context descriptor, which is used to measure the similarity of shapes and to find point correspondences between them. Instead of the Euclidean distance between two contour points, it uses the inner distance: the length of the shortest path between them that stays inside the shape. For each point along the object's contour, it computes a histogram in log-polar space that describes all the other contour points relative to that point in terms of distance and angle. Suppose there are $n$ points on the contour of a shape. For the point $p_i$, the coarse histogram $h_i$ of the relative coordinates of the remaining $n-1$ points is defined to be the shape context of $p_i$:

$$h_i(k) = \#\{\, q \neq p_i : (q - p_i) \in \mathrm{bin}(k) \,\}$$
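The histogram construction described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: the bin layout (5 radial bins, 12 angular bins, radii from 1/8 to 2 times the mean pairwise distance) is the one commonly used for shape context and is an assumption here, as are the function names. For simplicity the helper fills the distance and angle matrices with Euclidean values; an IDSC implementation would pass matrices of inner distances and inner angles instead.

```python
import math

def euclidean_geometry(points):
    """Pairwise distance and angle matrices for a list of (x, y) contour points.

    This is plain shape context geometry (an assumption for the sketch); IDSC
    would fill these matrices with inner distances and inner angles instead.
    """
    n = len(points)
    dist = [[0.0] * n for _ in range(n)]
    ang = [[0.0] * n for _ in range(n)]
    for a in range(n):
        for b in range(n):
            dx = points[b][0] - points[a][0]
            dy = points[b][1] - points[a][1]
            dist[a][b] = math.hypot(dx, dy)
            ang[a][b] = math.atan2(dy, dx) % (2 * math.pi)  # angle in [0, 2*pi)
    return dist, ang

def log_polar_histogram(dist, ang, i, n_r=5, n_theta=12, r_inner=0.125, r_outer=2.0):
    """Shape context h_i of point i: an n_r x n_theta count histogram of the
    other n - 1 points, binned by log distance and by angle."""
    n = len(dist)
    # Normalise by the mean pairwise distance so the descriptor is scale invariant.
    mean_d = sum(dist[a][b] for a in range(n) for b in range(n) if a != b) / (n * (n - 1))
    # Radial bin edges are evenly spaced in log space between r_inner and r_outer.
    lo, hi = math.log(r_inner), math.log(r_outer)
    edges = [math.exp(lo + k * (hi - lo) / n_r) for k in range(n_r + 1)]
    hist = [[0] * n_theta for _ in range(n_r)]
    for j in range(n):
        if j == i:
            continue
        r = dist[i][j] / mean_d
        if r < edges[0] or r >= edges[-1]:
            continue  # points outside the log-polar window are not counted
        r_bin = next(k for k in range(n_r) if r < edges[k + 1])
        t_bin = int(ang[i][j] / (2 * math.pi) * n_theta) % n_theta
        hist[r_bin][t_bin] += 1
    return hist
```

For example, `log_polar_histogram(*euclidean_geometry(points), i)` returns the 5 x 12 histogram for contour point `i`. Keeping the geometry separate from the binning is a deliberate choice: swapping in inner distances turns the same binning code into an IDSC-style descriptor.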

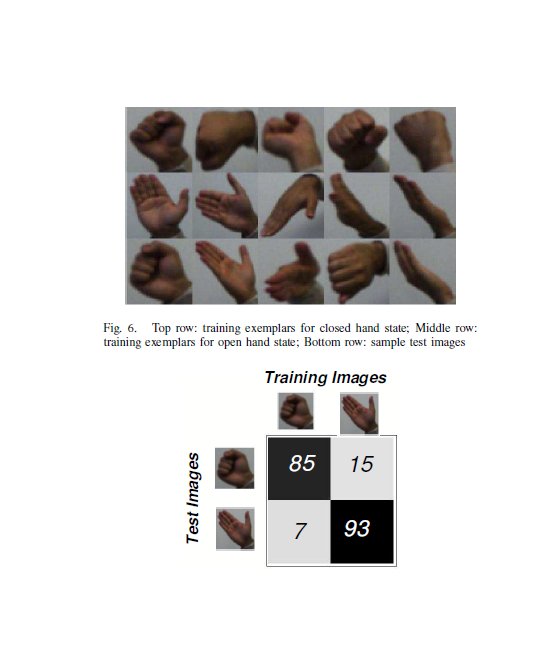

Algorithm : The palm region is segmented from a series of hand posture images using skin color segmentation. The segmented images are divided into two sets: a training set and a test set. A pre-processing step then groups the training and test images into bins according to their primary orientation, which is estimated from the scatter direction of each image using Principal Component Analysis (PCA). After pre-processing, the IDSC signatures of the segmented images are computed; for each contour point, the signature is a histogram describing the other contour points with respect to distance and angle. These signatures serve as the feature representation for the hand state classification problem. The paper addresses two kinds of classification problem:

- Two-class pattern classification, which determines whether the hand posture is open or closed. This is done using a support vector machine (SVM).

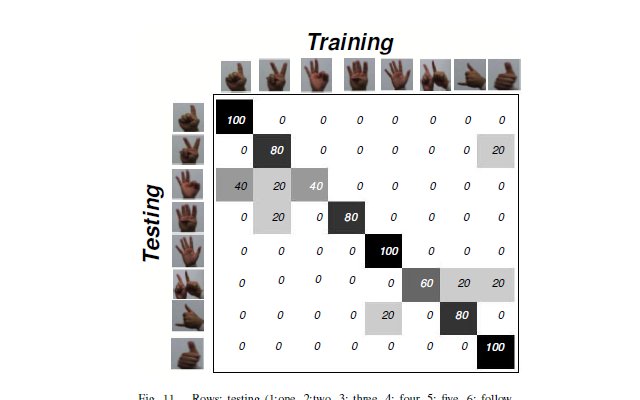

- Sign language pattern classification, which is an N-class problem. It is solved with N SVMs in a one-against-all configuration.
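Both classification problems can be sketched with a linear SVM. The sketch below assumes a linear kernel and trains each binary SVM with a full-batch variant of the Pegasos subgradient method; the paper's kernel, solver and hyperparameters are not stated here, and all function names are hypothetical. In practice the inputs would be IDSC-derived feature vectors rather than the toy 2-D points used to exercise it.

```python
def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def train_linear_svm(X, y, lam=0.01, epochs=1000):
    """Binary linear SVM for labels in {+1, -1}, trained with full-batch Pegasos
    updates. A constant 1 is appended to each input so the last weight acts as
    a (regularised) bias."""
    Xa = [list(x) + [1.0] for x in X]
    n, d = len(Xa), len(Xa[0])
    w = [0.0] * d
    for t in range(1, epochs + 1):
        eta = 1.0 / (lam * t)
        # Samples inside the margin contribute to the hinge-loss subgradient.
        viol = [(xi, yi) for xi, yi in zip(Xa, y) if yi * dot(w, xi) < 1]
        w = [(1.0 - eta * lam) * wj for wj in w]  # shrink step from the L2 regulariser
        for xi, yi in viol:
            for j in range(d):
                w[j] += eta * yi * xi[j] / n
    return w

def decision(w, x):
    """Signed SVM score; positive means the +1 class (e.g. a hypothetical 'open hand' label)."""
    return dot(w, list(x) + [1.0])

def train_one_vs_all(X, labels, classes, **kwargs):
    """N binary SVMs: for class k, samples of k are +1 and all others are -1."""
    return {k: train_linear_svm(X, [1 if lab == k else -1 for lab in labels], **kwargs)
            for k in classes}

def predict_one_vs_all(models, x):
    """Pick the class whose SVM gives the largest score."""
    return max(models, key=lambda k: decision(models[k], x))
```

The open/closed case is a single call to `train_linear_svm` with labels +1 and -1. For N signs, `train_one_vs_all` trains one classifier per sign and `predict_one_vs_all` picks the sign whose classifier is most confident, which is the usual way to combine one-against-all SVMs.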

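The orientation pre-processing step from the algorithm description can be sketched as follows, assuming each segmented palm is available as a list of (x, y) foreground pixel coordinates. The dominant scatter direction is the leading eigenvector of the 2x2 coordinate covariance matrix, whose angle has a closed form. The number of orientation bins is not stated here, so `n_bins` and both function names are hypothetical.

```python
import math

def principal_orientation(pixels):
    """Angle in [0, pi) of the first principal component of (x, y) pixel
    coordinates, i.e. the dominant scatter direction of the segmented hand."""
    n = len(pixels)
    mx = sum(x for x, _ in pixels) / n
    my = sum(y for _, y in pixels) / n
    sxx = sum((x - mx) ** 2 for x, _ in pixels) / n
    syy = sum((y - my) ** 2 for _, y in pixels) / n
    sxy = sum((x - mx) * (y - my) for x, y in pixels) / n
    # Closed-form angle of the leading eigenvector of [[sxx, sxy], [sxy, syy]].
    return (0.5 * math.atan2(2 * sxy, sxx - syy)) % math.pi

def orientation_bin(pixels, n_bins=4):
    """Hypothetical grouping: split [0, pi) into n_bins equal-width orientation bins."""
    theta = principal_orientation(pixels)
    return min(int(theta / math.pi * n_bins), n_bins - 1)
```

The angle is reported modulo pi because a principal axis has no preferred direction: a hand pointing up and one pointing down share the same scatter axis.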

References :

- Raghuraman Gopalan and Behzad Dariush, "Toward a vision based hand gesture interface for robotic grasping," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2009.