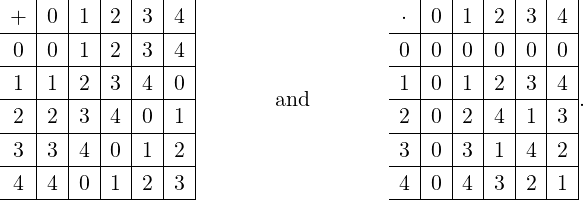

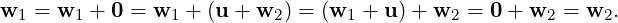

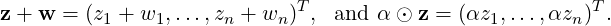

In this chapter, we will mainly be concerned with finite dimensional vector spaces over ℝ or ℂ. Note that the real and complex numbers have the property that any pair of elements can be added, subtracted or multiplied. Also, division by a nonzero element is allowed. Such sets in mathematics are called fields. So, ℝ and ℂ are examples of fields. The fields ℝ and ℂ have infinitely many elements. But, in mathematics, we do have fields that have only finitely many elements. For example, consider the set ℤ5 = {0,1,2,3,4}. In ℤ5, we define addition and multiplication modulo 5: the sum and the product of a and b are the remainders of a + b and ab, respectively, on division by 5.

Thus, ℤ5 indeed behaves like a field. So, in this chapter, F will represent a field.
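The claim that ℤ5 behaves like a field can be checked by brute force. The following Python sketch builds the addition and multiplication tables modulo 5 and looks up a multiplicative inverse for every nonzero element. The names `add_table`, `mul_table` and `inverses` are illustrative choices and do not come from the text.

```python
# Arithmetic in Z_5 = {0, 1, 2, 3, 4}: addition and multiplication modulo 5.
P = 5
elements = list(range(P))

# Entry [a][b] of each table holds the result of combining a and b.
add_table = [[(a + b) % P for b in elements] for a in elements]
mul_table = [[(a * b) % P for b in elements] for a in elements]

# A field needs a multiplicative inverse for every nonzero element.
inverses = {
    a: next(b for b in elements if (a * b) % P == 1)
    for a in elements
    if a != 0
}

print("addition table:")
for row in add_table:
    print(row)
print("multiplication table:")
for row in mul_table:
    print(row)
print("inverses:", inverses)
```

Every nonzero element of ℤ5 receives an inverse, so division by a nonzero element is possible. The same search raises `StopIteration` for a modulus such as 4, where 2 has no inverse.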

Let A ∈ Mm,n(F) and let V denote the solution set of the homogeneous system Ax = 0. Then, by Theorem 2.1.9, V satisfies:


That is, the solution set of a homogeneous linear system satisfies some nice properties. The Euclidean plane, ℝ², and the Euclidean space, ℝ³, also satisfy the above properties. In this chapter, our aim is to understand sets that satisfy such properties. We start with the following definition.

Definition 3.1.1. [Vector Space] A vector space V over F, denoted V(F) or in short V (if the field F is clear from the context), is a non-empty set, satisfying the following conditions:


Remark 3.1.2. [Real / Complex Vector Space]


Some interesting consequences of Definition 3.1.1 are stated next. Intuitively, they seem obvious but, for a better understanding of the given conditions, it is desirable to go through the proof.

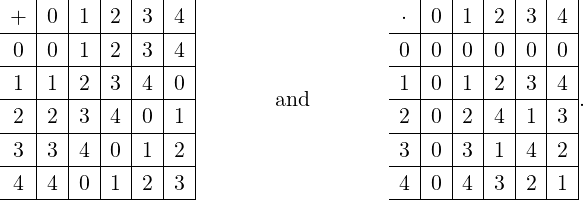

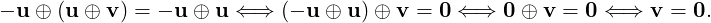

Proof. Part 1: By Condition 3.1.1.1d, for each u ∈ V there exists -u ∈ V such that -u ⊕ u = 0. Hence, u ⊕ v = u is equivalent to

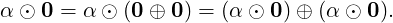

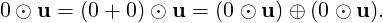

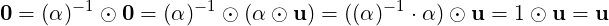

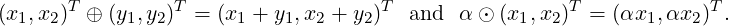

Part 2: As 0 = 0 ⊕ 0, using Condition 3.1.1.3, we have


Now suppose α ⊙ u = 0. If α = 0 then the proof is over. Therefore, assume that α ≠ 0, α ∈ F. Then, α⁻¹ ∈ F and

0 = α⁻¹ ⊙ 0 = α⁻¹ ⊙ (α ⊙ u) = (α⁻¹α) ⊙ u = 1 ⊙ u = u.

Part 3: As 0 = 0 ⋅ u = (1 + (-1))u = u ⊕ (-1) ⋅ u, one has (-1) ⋅ u = -u. ∎

Example 3.1.4. The readers are advised to justify the statements given below.


Recall that the symbol i represents the complex number √−1.

Let 𝒞([a,b], ℝ) = {f : [a,b] → ℝ | f is continuous}. Then, 𝒞([a,b], ℝ) with (f + αg)(x) = f(x) + αg(x), for all x ∈ [a,b], is a real vector space.

Let 𝒞(ℝ, ℝ) = {f : ℝ → ℝ | f is continuous}. Then, 𝒞(ℝ, ℝ) is a real vector space, where (f + αg)(x) = f(x) + αg(x), for all x ∈ ℝ.

Let 𝒞²((a,b), ℝ) = {f : (a,b) → ℝ | f′′ is continuous}. Then, 𝒞²((a,b), ℝ) with (f + αg)(x) = f(x) + αg(x), for all x ∈ (a,b), is a real vector space.

Let 𝒞^∞((a,b), ℝ) = {f : (a,b) → ℝ | f is infinitely differentiable}. Then, 𝒞^∞((a,b), ℝ) with (f + αg)(x) = f(x) + αg(x), for all x ∈ (a,b), is a real vector space.

Note that in the last few examples we can replace ℝ by ℂ to get the corresponding complex vector spaces.

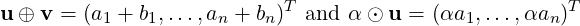

ℝ[x] = $\{a_0 + a_1 x + \cdots + a_n x^n \mid a_i \in \mathbb{R}, \text{ for } 0 \le i \le n\}$. Now, let p(x), q(x) ∈ ℝ[x]. Then, we can choose m such that $p(x) = a_0 + a_1 x + \cdots + a_m x^m$ and $q(x) = b_0 + b_1 x + \cdots + b_m x^m$, where some of the $a_i$’s or $b_j$’s may be zero. Then, we define

$$p(x) + q(x) = (a_0 + b_0) + (a_1 + b_1)x + \cdots + (a_m + b_m)x^m \quad \text{and} \quad \alpha p(x) = (\alpha a_0) + (\alpha a_1)x + \cdots + (\alpha a_m)x^m, \text{ for } \alpha \in \mathbb{R}.$$

With the operations defined above (called component-wise addition and multiplication), it can be easily verified that ℝ[x] forms a real vector space.

ℂ[x] = $\{a_0 + a_1 x + \cdots + a_n x^n \mid a_i \in \mathbb{C}, \text{ for } 0 \le i \le n\}$. Then, with component-wise addition and multiplication, the set ℂ[x] forms a complex vector space. One can also look at ℂ[x;n], the set of complex polynomials of degree less than or equal to n. Then, ℂ[x;n] forms a complex vector space.

∉ ℚ, these real numbers are vectors but not scalars in this space.

, i ∉ ℚ, these complex numbers are vectors but not scalars in this space.

Note that all our vector spaces, except the last three, are linear spaces.

From now on, we will use ‘u + v’ for ‘u ⊕ v’ and ‘αu or α ⋅ u’ for ‘α ⊙ u’.

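$\left\{ \begin{bmatrix} a & b \\ c & d \end{bmatrix} \;\middle|\; a, b, c, d \in \mathbb{C},\ a + c = 0 \right\}$.

$\left\{ \begin{bmatrix} a & b \\ c & d \end{bmatrix} \;\middle|\; a = \overline{b},\ a, b, c, d \in \mathbb{C} \right\}$.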

Definition 3.1.7. [Vector Subspace] Let V be a vector space over F. Then, a non-empty subset S of V is called a subspace of V if S is also a vector space with vector addition and scalar multiplication inherited from V.


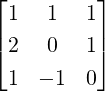

$\left\{ x \in \mathbb{R}^3 \;\middle|\; \begin{bmatrix} 1 & 1 & 1 \\ 1 & -1 & -1 \end{bmatrix} x = 0 \right\}$ is a line in ℝ³ containing 0 (hence a subspace).

𝒞²(a,b) is a subspace of 𝒞(a,b).

Let V(F) be a vector space and W ⊆ V, W ≠ ∅. We now prove a result which implies that, to check whether W is a subspace, we need to verify only one condition.

Theorem 3.1.9. Let V(F) be a vector space and W ⊆ V, W≠∅. Then, W is a subspace of V if and only if αu + βv ∈ W whenever α,β ∈ F and u,v ∈ W.

Proof. Let W be a subspace of V and let u,v ∈ W. Then, for every α,β ∈ F, αu,βv ∈ W and hence αu + βv ∈ W.

Now, we assume that αu + βv ∈ W, whenever α,β ∈ F and u,v ∈ W. To show, W is a subspace of V:

Thus, one obtains the required result. ∎

𝒞([-1,1])?

Definition 3.1.11. [Linear Combination] Let V be a vector space over F. Then, for any $u_1, \ldots, u_n \in V$ and $\alpha_1, \ldots, \alpha_n \in F$, the vector $\alpha_1 u_1 + \cdots + \alpha_n u_n = \sum_{i=1}^{n} \alpha_i u_i$ is said to be a linear combination of the vectors $u_1, \ldots, u_n$.

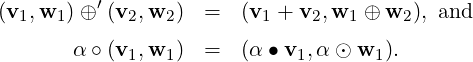

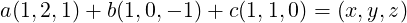

| (3.1.1) |

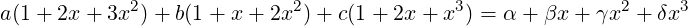

in the variables a,b,c ∈ ℝ has a solution. Clearly, Equation (3.1.1) has solution a = 9,b = -10 and c = 5.

| (3.1.2) |

in the variables a,b,c ∈ ℝ has a solution. Verify that the system has no solution. Thus, 4 + 5x + 5x² + x³ is not a linear combination of the given set of polynomials.

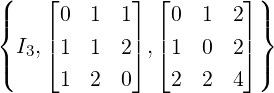

a linear combination of the vectors I₃, … and …?

= I₃ + 2 … + …. Hence, it is indeed a linear combination of the given vectors of M3(ℝ).

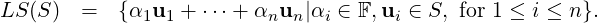

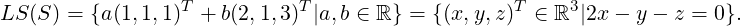

Definition 3.1.14. [Linear Span] Let V be a vector space over F and S ⊆ V. Then, the linear span of S, denoted LS(S), is defined as


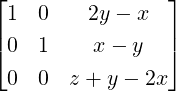

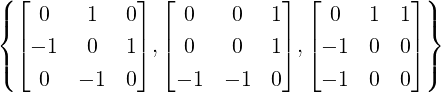

Example 3.1.15. For the set S given below, determine LS(S).

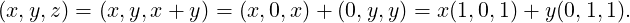

. Thus, the required condition on x, y and z is given by z + y − 2x = 0. Hence,

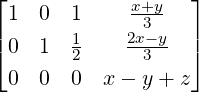

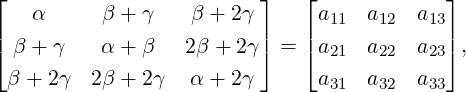

| (3.1.3) |

in the variables a,b,c is always consistent. An application of GJE to Equation (3.1.3) gives

. Thus, LS(S) = {(x,y,z)ᵀ ∈ ℝ³ | x − y + z = 0}.

LS(S) = {α + βx + γx² + δx³ ∈ ℝ[x] | α + β − γ − 3δ = 0}.

⊆ M3(ℝ).

$$LS(S) = \left\{ A = [a_{ij}] \in M_3(\mathbb{R}) \;\middle|\; A = A^T,\ a_{11} = \frac{a_{22} + a_{33} - a_{13}}{2},\ a_{12} = \frac{a_{22} - a_{33} + 3a_{13}}{4},\ a_{23} = \frac{a_{22} - a_{33} + 3a_{13}}{2} \right\}.$$

Exercise 3.1.16. For each S, determine the equation of the geometrical object that LS(S) represents.

⊆ M3(ℝ).

Definition 3.1.17. [Finite Dimensional Vector Space] Let V be a vector space over F. Then, V is called finite dimensional if there exists S ⊆ V such that S has a finite number of elements and V = LS(S). If such an S does not exist then V is called infinite dimensional.


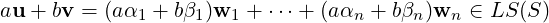

Lemma 3.1.19 (Linear Span is a Subspace). Let V be a vector space over F and S ⊆ V. Then, LS(S) is a subspace of V.

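Proof. By definition, 0 ∈ LS(S). So, LS(S) is non-empty. Let u,v ∈ LS(S). To show, au + bv ∈ LS(S) for all a,b ∈ F. As u,v ∈ LS(S), there exist n ∈ ℕ, vectors $w_i \in S$ and scalars $\alpha_i, \beta_i \in F$ such that $u = \alpha_1 w_1 + \cdots + \alpha_n w_n$ and $v = \beta_1 w_1 + \cdots + \beta_n w_n$. Hence,

$$au + bv = (a\alpha_1 + b\beta_1) w_1 + \cdots + (a\alpha_n + b\beta_n) w_n \in LS(S),$$

as $a\alpha_i + b\beta_i \in F$, for 1 ≤ i ≤ n. Thus, by Theorem 3.1.9, LS(S) is a subspace of V. ∎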

Exercise 3.1.20. Let V be a vector space over F and W ⊆ V.


Theorem 3.1.21. Let V be a vector space over F and S ⊆ V. Then, LS(S) is the smallest subspace of V containing S.

Proof. For every u ∈ S, u = 1 ⋅ u ∈ LS(S). Thus, S ⊆ LS(S). We need to show that LS(S) is the smallest subspace of V containing S. So, let W be any subspace of V containing S. Then, by Exercise 3.1.20, LS(S) ⊆ W and hence the result follows. ∎

Definition 3.1.22. [Sum of two subsets] Let V be a vector space over F.

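If S = $\left\{ \begin{bmatrix} 1 \\ 1 \end{bmatrix} \right\}$ and T = $\left\{ \begin{bmatrix} -1 \\ 1 \end{bmatrix} \right\}$ then S + T = $\left\{ \begin{bmatrix} 0 \\ 2 \end{bmatrix} \right\}$.

If S = $\left\{ \begin{bmatrix} 1 \\ 1 \end{bmatrix} \right\}$ and T = $LS\left( \begin{bmatrix} -1 \\ 1 \end{bmatrix} \right)$ then S + T = $\left\{ \begin{bmatrix} 1 \\ 1 \end{bmatrix} + c \begin{bmatrix} -1 \\ 1 \end{bmatrix} \;\middle|\; c \in \mathbb{R} \right\}$.

(1,2) + (2,1).

We leave the proof of the next result to the reader.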

Lemma 3.1.23. Let P and Q be two subspaces of a vector space V over F. Then, P + Q is a subspace of V. Furthermore, P + Q is the smallest subspace of V containing both P and Q.

Let U = $\left\{ \begin{bmatrix} a & b \\ c & 0 \end{bmatrix} \;\middle|\; a, b, c \in \mathbb{R} \right\}$ and W = $\left\{ \begin{bmatrix} a & 0 \\ 0 & d \end{bmatrix} \;\middle|\; a, d \in \mathbb{R} \right\}$ be subspaces of M2(ℝ). Determine U ∩ W. Is M2(ℝ) = U ∪ W? What is U + W?

Let V be a vector space over either ℝ or ℂ. Then, we have learnt the following:

Therefore, the following questions arise:

We try to answer these questions in the subsequent sections. Before doing so, we give a short section on fundamental subspaces associated with a matrix.

Definition 3.2.1. [Fundamental Subspaces] Let A ∈ Mm,n(ℂ). Then, we define the four fundamental subspaces associated with A as

Remark 3.2.2. Let A ∈ Mm,n(ℂ).

. Then, verify that

. Then,

Remark 3.2.4. Let A ∈ Mm,n(ℝ). Then, in Example 3.2.3, observe that the direction ratios of the normal vectors of Col(A) match the vectors in Null(Aᵀ). Similarly, the direction ratios of the normal vectors of Row(A) match the vectors in Null(A). Are these true in the general setting? Do similar relations hold if A ∈ Mm,n(ℂ)? We will come back to these spaces again and again.

. Then, determine Col(A), Row(A), Null(A) and Null(Aᵀ).

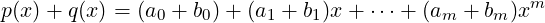

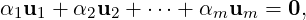

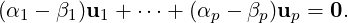

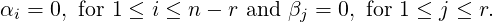

Definition 3.3.1. [Linear Independence and Dependence] Let S = {u1,…,um} be a non-empty subset of a vector space V over F. Then, S is said to be linearly independent if the linear system

$$\alpha_1 u_1 + \alpha_2 u_2 + \cdots + \alpha_m u_m = 0 \qquad (3.3.1)$$

in the variables αi’s, 1 ≤ i ≤ m, has only the trivial solution. If Equation (3.3.1) has a non-trivial solution then S is said to be linearly dependent.

If S has infinitely many vectors then S is said to be linearly independent if for every finite subset T of S, T is linearly independent.

Observe that we are solving a linear system over F. Hence, linear independence and dependence depend on F, the set of scalars.

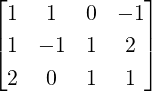

![[ ]

1+ 2x + x2 2 + x+ 4x2 3 + 3x + 5x2](LA833x.png)

= 0,

= 0,

DRAFT

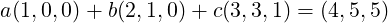

or equivalently a(1 + 2x + x2) + b(2 + x + 4x2) + c(3 + 3x + 5x2) = 0, in the variables

a,b and c. As two polynomials are equal if and only if their coefficients are equal,

the above system reduces to the homogeneous system a + 2b + 3c = 0,2a + b + 3c =

0,a+4b+5c = 0. The corresponding coefficient matrix has rank 2 < 3, the number of

variables. Hence, the system has a non-trivial solution. Thus, S is a linearly dependent

subset of ℝ[x;2].

DRAFT

or equivalently a(1 + 2x + x2) + b(2 + x + 4x2) + c(3 + 3x + 5x2) = 0, in the variables

a,b and c. As two polynomials are equal if and only if their coefficients are equal,

the above system reduces to the homogeneous system a + 2b + 3c = 0,2a + b + 3c =

0,a+4b+5c = 0. The corresponding coefficient matrix has rank 2 < 3, the number of

variables. Hence, the system has a non-trivial solution. Thus, S is a linearly dependent

subset of ℝ[x;2].
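The rank claim in this example can be checked mechanically. The Python sketch below computes the rank of the coefficient matrix by Gaussian elimination over the rationals and confirms one explicit non-trivial solution, (a, b, c) = (1, 1, −1). That solution was found by hand for this illustration and is not stated in the text. The helper `rank` is a hypothetical name written here.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix (given as a list of rows) by Gaussian elimination over Q."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0  # number of pivots found so far
    for col in range(len(m[0])):
        # Look for a non-zero entry in this column at or below row r.
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        # Clear the entries below the pivot.
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
    return r


# Row k holds the coefficients of x^k in a*p1 + b*p2 + c*p3, where
# p1 = 1 + 2x + x^2, p2 = 2 + x + 4x^2 and p3 = 3 + 3x + 5x^2.
coeff = [[1, 2, 3],
         [2, 1, 3],
         [1, 4, 5]]

r = rank(coeff)

# A candidate non-trivial solution (an assumption of this sketch, checked below).
a, b, c = 1, 1, -1
residual = [a * row[0] + b * row[1] + c * row[2] for row in coeff]

print("rank:", r)
print("residual of (1, 1, -1):", residual)
```

A zero residual means (1 + 2x + x²) + (2 + x + 4x²) − (3 + 3x + 5x²) = 0, which is a non-trivial linear combination equal to the zero polynomial.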

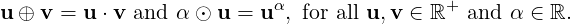

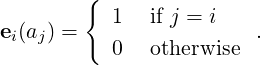

𝒞([-π,π], ℝ) over ℝ as the system

$$\begin{bmatrix} 1 & \sin(x) & \cos(x) \end{bmatrix} \begin{bmatrix} a \\ b \\ c \end{bmatrix} = 0 \iff a \cdot 1 + b \cdot \sin(x) + c \cdot \cos(x) = 0, \qquad (3.3.2)$$

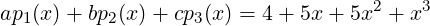

in the variables a, b and c has only the trivial solution. To verify this, evaluate Equation (3.3.2) at −π/2, 0 and π/2 to get the homogeneous system a − b = 0, a + c = 0, a + b = 0. Clearly, this system has only the trivial solution.
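The evaluation step can be reproduced numerically. The Python sketch below samples the functions 1, sin(x) and cos(x) at −π/2, 0 and π/2, assembles the resulting 3 × 3 homogeneous system and computes its determinant. A non-zero determinant means only the trivial solution exists. The helper `det3` is a hypothetical name written for this illustration, and floating point arithmetic is used, so the check is approximate.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


# Sample points used to turn the functional identity into a linear system.
points = [-math.pi / 2, 0.0, math.pi / 2]

# Each row is [1, sin(x), cos(x)] at one sample point; the unknowns are a, b, c.
system = [[1.0, math.sin(x), math.cos(x)] for x in points]

d = det3(system)
print("determinant:", round(d, 6))
```

The determinant is close to −2 and therefore non-zero, which agrees with the conclusion that a = b = c = 0.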

$\begin{bmatrix} (0,1,1) & (1,1,0) & (1,0,1) \end{bmatrix} \begin{bmatrix} a \\ b \\ c \end{bmatrix}$ = (0,0,0) in the variables a, b and c. As the rank of the coefficient matrix is 3, the number of variables, the system has only the trivial solution. Hence, S is a linearly independent subset of ℝ³.

$\begin{bmatrix} C \\ 0 \end{bmatrix}$. Thus, $0^T = (PA)[m,:] = \sum_{i=1}^{m} p_{mi} A[i,:]$. As P is invertible, at least one $p_{mi} \neq 0$. Thus, the required result follows.

$\begin{bmatrix} B & 0 \end{bmatrix}$. Thus, $0 = (AQ)[:,n] = \sum_{i=1}^{n} q_{in} A[:,i]$. As Q is invertible, at least one $q_{in} \neq 0$. Thus, the required result follows.

The reader is expected to supply the proof of parts that are not given.

Proof. Let 0 ∈ S. Then, 1 ⋅ 0 = 0. That is, a non-trivial linear combination of some vectors in S is 0. Thus, the set S is linearly dependent. ∎

We now prove a couple of results which will be very useful in the next section.

Proposition 3.3.4. Let S be a linearly independent subset of a vector space V over F. If T1,T2 are two subsets of S such that T1 ∩ T2 = ∅ then, LS(T1) ∩ LS(T2) = {0}. That is, if v ∈ LS(T1) ∩ LS(T2) then v = 0.

Proof. Let v ∈ LS(T1) ∩ LS(T2). Then, there exist vectors $u_1, \ldots, u_k \in T_1$, $w_1, \ldots, w_\ell \in T_2$ and scalars $\alpha_i$’s and $\beta_j$’s (not all zero) such that $v = \sum_{i=1}^{k} \alpha_i u_i$ and $v = \sum_{j=1}^{\ell} \beta_j w_j$. Thus, we see that $\sum_{i=1}^{k} \alpha_i u_i + \sum_{j=1}^{\ell} (-\beta_j) w_j = 0$. As the scalars $\alpha_i$’s and $\beta_j$’s are not all zero, we see that a non-trivial linear combination of some vectors in T1 ∪ T2 ⊆ S is 0. This contradicts the assumption that S is a linearly independent subset of V. Hence, each of the $\alpha_i$’s and $\beta_j$’s is zero. That is, v = 0. ∎

We now prove another useful result.

Theorem 3.3.5. Let S = {u1,…,uk} be a non-empty subset of a vector space V over F. If T ⊆ LS(S) has more than k vectors then T is a linearly dependent subset of V.

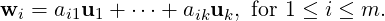

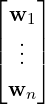

Proof. Let T = {w1,…,wm}. As wi ∈ LS(S), there exist aij ∈ F such that


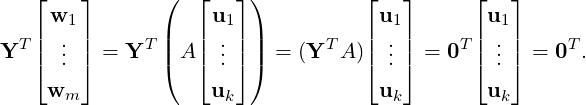

m > k, using Corollary 2.2.42, the linear system xᵀA = 0ᵀ has a non-trivial solution, say Y ≠ 0. That is, YᵀA = 0ᵀ. Thus,

Proof. Observe that ℝⁿ = LS({e1,…,en}), where ei = In[:,i] is the i-th column of In. Hence, using Theorem 3.3.5, the required result follows. ∎
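Theorem 3.3.5 guarantees that any four vectors in ℝ³ are linearly dependent. The Python sketch below makes this concrete by computing an explicit non-trivial dependency with Gauss-Jordan elimination over the rationals. The function `nontrivial_solution` is a hypothetical helper written for this illustration. The sample uses the three vectors (0,1,1), (1,1,0), (1,0,1) that appear earlier in the section, together with an arbitrarily chosen fourth vector (1,1,1).

```python
from fractions import Fraction


def nontrivial_solution(vectors):
    """Return a non-zero x with sum_j x[j] * vectors[j] = 0, or None if none exists."""
    n_rows, n_cols = len(vectors[0]), len(vectors)
    # Build the matrix whose j-th column is vectors[j].
    m = [[Fraction(vectors[j][i]) for j in range(n_cols)] for i in range(n_rows)]
    pivots = []  # pivots[k] is the pivot column of row k
    r = 0
    for col in range(n_cols):
        p = next((i for i in range(r, n_rows) if m[i][col] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        lead = m[r][col]
        m[r] = [entry / lead for entry in m[r]]  # scale the pivot to 1
        for i in range(n_rows):
            if i != r and m[i][col] != 0:
                factor = m[i][col]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        pivots.append(col)
        r += 1
    free = [c for c in range(n_cols) if c not in pivots]
    if not free:
        return None  # only the trivial solution exists
    # Set the first free variable to 1 and read off the pivot variables.
    x = [Fraction(0)] * n_cols
    x[free[0]] = Fraction(1)
    for row, col in enumerate(pivots):
        x[col] = -m[row][free[0]]
    return x


vectors = [(0, 1, 1), (1, 1, 0), (1, 0, 1), (1, 1, 1)]
x = nontrivial_solution(vectors)
print([str(c) for c in x])
```

With more vectors than coordinates there is always at least one free variable, so the function never returns `None` in that situation. This mirrors the use of Corollary 2.2.42 in the proof.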

Theorem 3.3.7. Let S be a linearly independent subset of a vector space V over F. Then, for any v ∈ V the set S ∪{v} is linearly dependent if and only if v ∈ LS(S).

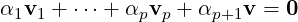

Proof. Let us assume that S ∪{v} is linearly dependent. Then, there exist vi’s in S such that the linear system

$$\alpha_1 v_1 + \cdots + \alpha_p v_p + \alpha_{p+1} v = 0 \qquad (3.3.3)$$

in the variables $\alpha_i$’s has a non-trivial solution, say $\alpha_i = c_i$, for 1 ≤ i ≤ p + 1. We claim that $c_{p+1} \neq 0$. For, if $c_{p+1} = 0$ then a non-trivial solution of Equation (3.3.3) corresponds to a non-trivial solution of the linear system $\alpha_1 v_1 + \cdots + \alpha_p v_p = 0$ in the variables $\alpha_1, \ldots, \alpha_p$. This contradicts Proposition 3.3.3.2 as $\{v_1, \ldots, v_p\} \subseteq S$, a linearly independent set. Thus, $c_{p+1} \neq 0$ and we get

$$v = -\frac{1}{c_{p+1}} (c_1 v_1 + \cdots + c_p v_p) \in LS(\{v_1, \ldots, v_p\}), \text{ as } -\frac{c_i}{c_{p+1}} \in F, \text{ for } 1 \le i \le p.$$

That is, v is a linear combination of $v_1, \ldots, v_p$.

Now, assume that v ∈ LS(S). Then, there exist $v_i \in S$ and $c_i \in F$, not all zero, such that $v = \sum_{i=1}^{p} c_i v_i$. Thus, the linear system $\alpha_1 v_1 + \cdots + \alpha_p v_p + \alpha_{p+1} v = 0$ in the variables $\alpha_i$’s has a non-trivial solution $[c_1, \ldots, c_p, -1]$. Hence, S ∪ {v} is linearly dependent. ∎

We now state a very important corollary of Theorem 3.3.7 without proof. This result can also be used as an alternative definition of linear independence and dependence.

Corollary 3.3.8. Let V be a vector space over F and let S be a subset of V containing a non-zero vector u1.


We start with our understanding of the RREF.

Theorem 3.3.9. Let A ∈ Mm,n(ℂ). Then, the rows of A corresponding to the pivotal rows of RREF(A) are linearly independent. Also, the columns of A corresponding to the pivotal columns of RREF(A) are linearly independent.

Proof. Let RREF(A) = B. Then, the pivotal rows of B are linearly independent due to the pivotal 1’s. Now, let B1 be the submatrix of B consisting of the pivotal rows of B. Also, let A1 be the submatrix of A whose rows correspond to the rows of B1. As the RREF of a matrix is unique (see Corollary 2.2.18), there exists an invertible matrix Q such that QA1 = B1. So, if there exists c ≠ 0 such that cᵀA1 = 0ᵀ then

Let B[:,i1],…,B[:,ir] be the pivotal columns of B. Then, they are linearly independent due to pivotal 1’s. As B = RREF(A), there exists an invertible matrix P such that B = PA. Then, the corresponding columns of A satisfy

$$[A[:,i_1], \ldots, A[:,i_r]] = [P^{-1}B[:,i_1], \ldots, P^{-1}B[:,i_r]] = P^{-1}[B[:,i_1], \ldots, B[:,i_r]].$$

As $P^{-1}$ is invertible, the systems $[A[:,i_1], \ldots, A[:,i_r]]\,x = 0$ and $[B[:,i_1], \ldots, B[:,i_r]]\,x = 0$ are row-equivalent. Thus, they have the same solution set. Hence, $\{A[:,i_1], \ldots, A[:,i_r]\}$ is linearly independent if and only if $\{B[:,i_1], \ldots, B[:,i_r]\}$ is linearly independent. Thus, the required result follows. ∎

The next result follows directly from Theorem 3.3.9 and hence the proof is left to readers.

Corollary 3.3.10. The following statements are equivalent for A ∈ Mn(ℂ).

We give an example for better understanding.

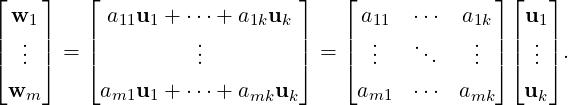

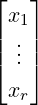

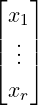

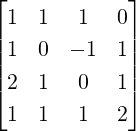

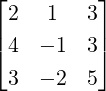

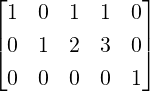

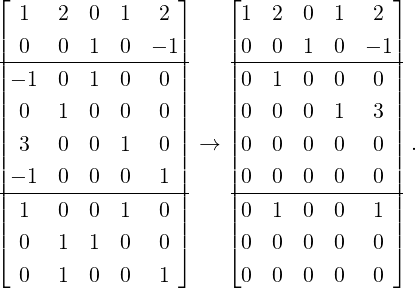

Example 3.3.11. Let A =  with RREF(A) = B =


We end this section with a result stating that a linear combination with respect to a linearly independent set is unique.

Lemma 3.3.12. Let S be a linearly independent subset of a vector space V over F. Then, each v ∈ LS(S) is a unique linear combination of vectors from S.

Proof. Suppose there exists v ∈ LS(S) with v ∈ LS(T1), LS(T2) with T1,T2 ⊆ S. Let $T_1 = \{v_1, \ldots, v_k\}$ and $T_2 = \{w_1, \ldots, w_\ell\}$, for some $v_i$’s and $w_j$’s in S. Define T = T1 ∪ T2. Then, T is a subset of S. Hence, using Proposition 3.3.3, the set T is linearly independent. Let $T = \{u_1, \ldots, u_p\}$. Then, there exist $\alpha_i$’s and $\beta_j$’s in F, not all zero, such that $v = \alpha_1 u_1 + \cdots + \alpha_p u_p$ as well as $v = \beta_1 u_1 + \cdots + \beta_p u_p$. Equating the two expressions for v gives

$$(\alpha_1 - \beta_1) u_1 + \cdots + (\alpha_p - \beta_p) u_p = 0. \qquad (3.3.4)$$

As T is a linearly independent subset of V, the system $c_1 u_1 + \cdots + c_p u_p = 0$, in the variables $c_1, \ldots, c_p$, has only the trivial solution. Thus, in Equation (3.3.4), $\alpha_i - \beta_i = 0$, for 1 ≤ i ≤ p. Thus, for 1 ≤ i ≤ p, $\alpha_i = \beta_i$ and the required result follows. ∎

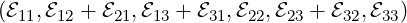

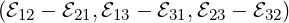

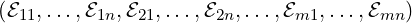

Is the set {eᵢⱼ | 1 ≤ i ≤ m, 1 ≤ j ≤ n} a linearly independent subset of the vector space Mm,n(ℂ) over ℂ (see Definition 1.3.1.1)?

= A for some matrix A ∈ Mn(ℂ).

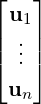

$$0^T = [\alpha_1, \ldots, \alpha_n] \begin{bmatrix} u_1 \\ \vdots \\ u_n \end{bmatrix} = \left( [\alpha_1, \ldots, \alpha_n] A^{-1} \right) \left( A \begin{bmatrix} u_1 \\ \vdots \\ u_n \end{bmatrix} \right) = \left( [\alpha_1, \ldots, \alpha_n] A^{-1} \right) \begin{bmatrix} w_1 \\ \vdots \\ w_n \end{bmatrix}.$$

. Determine x, y, z ∈ ℝ³ \ {0} such that Ax = 6x, Ay = 2y and Az = −2z. Use the vectors x, y and z obtained above to prove the following.

Definition 3.4.1. [Maximality] Let S be a subset of a set T. Then, S is said to be a maximal subset of T having property P if

Example 3.4.2. Let T = {2,3,4,7,8,10,12,13,14,15}. Then, a maximal subset of T of consecutive integers is S = {2,3,4}. Other maximal subsets are {7,8}, {10} and {12,13,14,15}. Note that {12,13} is not maximal. Why?
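The maximal subsets of consecutive integers in this example can be listed programmatically. The Python sketch below splits a set of integers into its maximal runs. The function name `maximal_consecutive_runs` is an illustrative choice.

```python
def maximal_consecutive_runs(values):
    """Split a set of integers into its maximal runs of consecutive integers."""
    runs = []
    for v in sorted(values):
        if runs and v == runs[-1][-1] + 1:
            runs[-1].append(v)  # v extends the current run
        else:
            runs.append([v])    # v starts a new run
    return runs


T = {2, 3, 4, 7, 8, 10, 12, 13, 14, 15}
runs = maximal_consecutive_runs(T)
print(runs)  # [[2, 3, 4], [7, 8], [10], [12, 13, 14, 15]]
```

The subset {12,13} does not appear in the output because it can be enlarged to {12,13,14,15} while keeping the property, so it is not maximal.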


Definition 3.4.3. [Maximal linearly independent set] Let V be a vector space over F. Then, S is called a maximal linearly independent subset of V if

Is the set {eᵢⱼ | 1 ≤ i ≤ m, 1 ≤ j ≤ n} a maximal linearly independent subset of Mm,n(ℂ) over ℂ?

Theorem 3.4.5. Let V be a vector space over F and S a linearly independent set in V. Then, S is maximal linearly independent if and only if LS(S) = V.

Proof. Let v ∈ V. As S is linearly independent, using Corollary 3.3.8.2, the set S ∪ {v} is linearly independent if and only if v ∈ V \ LS(S). Thus, the required result follows. ∎

Let V = LS(S) for some set S with |S| = k. Then, using Theorem 3.3.5, we see that if T ⊆ V is linearly independent then |T|≤ k. Hence, a maximal linearly independent subset of V can have at most k vectors. Thus, we arrive at the following important result.

Theorem 3.4.6. Let V be a vector space over F and let S and T be two finite maximal linearly independent subsets of V. Then, |S| = |T|.

Proof. By Theorem 3.4.5, S and T are maximal linearly independent if and only if LS(S) = V = LS(T). Now, use the previous paragraph to get the required result. ∎

Let V be a finite dimensional vector space. Then, by Theorem 3.4.6, the number of vectors in any two maximal linearly independent sets is the same. We use this number to define the dimension of a vector space.

Definition 3.4.7. [Dimension of a finite dimensional vector space] Let V be a finite dimensional vector space over F. Then, the number of vectors in any maximal linearly independent set is called the dimension of V, denoted dim(V). By convention, dim({0}) = 0.

As {eᵢⱼ | 1 ≤ i ≤ m, 1 ≤ j ≤ n} is a maximal linearly independent subset of Mm,n(ℂ) over ℂ, dim(Mm,n(ℂ)) = mn.

Definition 3.4.9. Let V be a vector space over F. Then, a maximal linearly independent subset of V is called a basis/Hamel basis of V. The vectors in a basis are called basis vectors. By convention, a basis of {0} is the empty set.

Existence of Hamel basis

Definition 3.4.10. [Minimal Spanning Set] Let V be a vector space over F. Then, a subset S of V is called minimal spanning if LS(S) = V and no proper subset of S spans V.

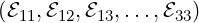

Remark 3.4.11 (Standard Basis). The readers should verify the statements given below.

eᵢᵢ, eᵢⱼ + eⱼᵢ | 1 ≤ i < j ≤ n}.

eᵢⱼ − eⱼᵢ | 1 ≤ i < j ≤ n}.

ℬ = {(1,0,0)ᵀ, (1,3,0)ᵀ} is a basis of V.

ℬ = {e1,…,en} is a linearly independent subset of ℝ^S over ℝ. Is it a basis of ℝ^S over ℝ? What can you say if S is a countable set?

(x1,…,xn) = xi. Then, verify that {e1,…,en} is a linearly independent subset of ℝ^S over ℝ. Is it a basis of ℝ^S over ℝ?

[a,b], where a < b ∈ ℝ. For each α ∈ [a,b], define fα(x) = x − α, for all x ∈ [a,b].

Theorem 3.4.13. Let V be a non-zero vector space over F. Then, the following statements are equivalent.

1. ℬ is a basis (maximal linearly independent subset) of V.
2. ℬ is linearly independent and spans V.
3. ℬ is a minimal spanning set in V.

Proof. 1 ⇒ 2: By definition, every basis is a maximal linearly independent subset of V. Thus, using Corollary 3.3.8.2, we see that ℬ spans V.

2 ⇒ 3: Let S be a linearly independent set that spans V. As S is linearly independent, for any x ∈ S, x ∉ LS(S \ {x}). Hence LS(S \ {x}) ⊊ LS(S) = V.

3 ⇒ 1: If ℬ is linearly dependent then, using Corollary 3.3.8.1, ℬ is not minimal spanning. A contradiction. Hence, ℬ is linearly independent.

We now need to show that ℬ is a maximal linearly independent set. Since LS(ℬ) = V, for any x ∈ V \ ℬ, using Corollary 3.3.8.2, the set ℬ ∪ {x} is linearly dependent. That is, every proper superset of ℬ is linearly dependent. Hence, the required result follows. ∎

Now, using Lemma 3.3.12, we get the following result.

Remark 3.4.14. Let ℬ be a basis of a vector space V over F. Then, for each v ∈ V, there exist unique $u_i \in ℬ$ and unique $\alpha_i \in F$, for 1 ≤ i ≤ n, such that $v = \sum_{i=1}^{n} \alpha_i u_i$.

The next result is generally known as “every linearly independent set can be extended to form a basis of a finite dimensional vector space”.

Theorem 3.4.15. Let V be a vector space over F with dim(V) = n. If S is a linearly independent subset of V then there exists a basis T of V such that S ⊆ T.

Proof. If LS(S) = V, done. Else, choose u1 ∈ V \ LS(S). Thus, by Corollary 3.3.8.2, the set S ∪ {u1} is linearly independent. We repeat this process till we get n vectors in T as dim(V) = n. By Theorem 3.4.13, this T is indeed a required basis. ∎

We end this section with an algorithm which is based on the proof of the previous theorem.

This process will finally end as V is a finite dimensional vector space.
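The extension process in the proof of Theorem 3.4.15 can be sketched in Python for the special case V = ℚⁿ, which is an assumption of this illustration. The proof allows any vector outside LS(S). This sketch restricts the candidates to the standard basis vectors e1,…,en, which suffices because they span ℚⁿ. The helpers `rank` and `extend_to_basis` are hypothetical names, and the input list is assumed to be linearly independent.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix (given as a list of rows) by Gaussian elimination over Q."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0  # number of pivots found so far
    for col in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
    return r


def extend_to_basis(independent, n):
    """Extend a linearly independent list of vectors in Q^n to a basis of Q^n."""
    basis = [list(v) for v in independent]
    for k in range(n):
        if len(basis) == n:
            break  # n independent vectors already form a basis
        e_k = [1 if j == k else 0 for j in range(n)]
        # e_k lies outside LS(basis) exactly when appending it raises the rank.
        if rank(basis + [e_k]) == len(basis) + 1:
            basis.append(e_k)
    return basis


S = [[1, 1, 0], [0, 1, 1]]
T = extend_to_basis(S, 3)
print(T)
```

The loop ends after at most n steps, in line with the remark that the process terminates because V is finite dimensional.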

Let ℬ = {u1,…,un} be a basis of a vector space V over F. Then, does the condition $\sum_{i=1}^{n} \alpha_i u_i = 0$ in the $\alpha_i$’s imply that $\alpha_i = 0$, for 1 ≤ i ≤ n?

are invertible.

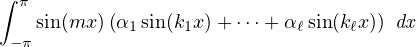

𝒞([-π,π]) over ℝ. For each n ∈ ℕ, define $e_n(x) = \sin(nx)$. Then, prove that $S = \{e_n \mid n \in \mathbb{N}\}$ is linearly independent. [Hint: Need to show that every finite subset of S is linearly independent. So, on the contrary assume that there exists ℓ ∈ ℕ and functions $e_{k_1}, \ldots, e_{k_\ell}$ such that $\alpha_1 e_{k_1} + \cdots + \alpha_\ell e_{k_\ell} = 0$, for some $\alpha_t \neq 0$ with 1 ≤ t ≤ ℓ. But, the above system is equivalent to looking at $\alpha_1 \sin(k_1 x) + \cdots + \alpha_\ell \sin(k_\ell x) = 0$ for all x ∈ [-π,π]. Now in the integral

𝒞([-π,π], ℝ) over ℝ?

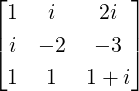

ℬ = {(1,0,1)ᵀ, (1,i,0)ᵀ, (1,1,1 − i)ᵀ} is a basis of ℂ³ over ℂ.

given in Example 3.4.16.14.

. Find a basis of V = {x ∈ ℝ⁵ | Ax = 0}.

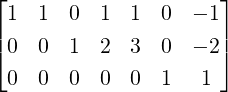

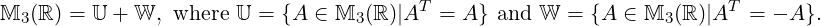

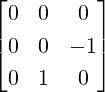

In this section, we will study results that are intrinsic to the understanding of linear algebra from the point of view of matrices, especially the fundamental subspaces (see Definition 3.2.1) associated with matrices. We start with an example.

Find a basis and dimension of Null(A).

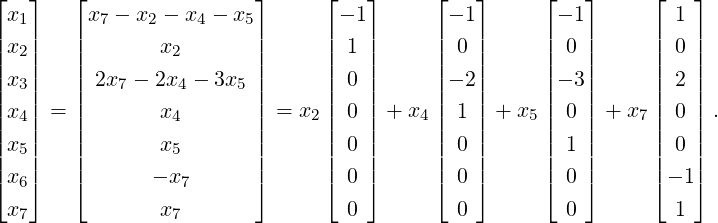

| (3.5.1) |

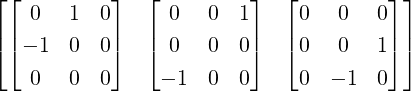

Now, let $u_1^T = [-1,1,0,0,0,0,0]$, $u_2^T = [-1,0,-2,1,0,0,0]$, $u_3^T = [-1,0,-3,0,1,0,0]$ and $u_4^T = [1,0,2,0,0,-1,1]$. Then, $S = \{u_1,u_2,u_3,u_4\}$ is a basis of Null(A). The reasons for S to be a basis are as follows:

The next result is a re-writing of the results on systems of linear equations. We give the proof for the sake of completeness.

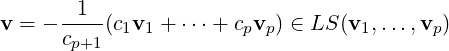

Proof. Part 1a: Let x ∈ Null(A). Then, Bx = EAx = E0 = 0. So, Null(A) ⊆ Null(B). Further, if x ∈ Null(B), then $Ax = (E^{-1}E)Ax = E^{-1}(EA)x = E^{-1}Bx = E^{-1}0 = 0$. Hence, Null(B) ⊆ Null(A). Thus, Null(A) = Null(B).


Let us now prove Row(A) = Row(B). So, let $x^T$ ∈ Row(A). Then, there exists $y \in \mathbb{C}^m$ such that $x^T = y^T A$. Thus, $x^T = (y^T E^{-1})EA = (y^T E^{-1})B$ and hence $x^T$ ∈ Row(B). That is, Row(A) ⊆ Row(B). A similar argument gives Row(B) ⊆ Row(A) and hence the required result follows.

Part 1b: E is invertible implies $\overline{E}$ is invertible and $\overline{B} = \overline{E}\,\overline{A}$. Thus, an argument similar to the previous part gives us the required result.

For Part 2, note that $B^* = E^*A^*$ and $E^*$ is invertible. Hence, an argument similar to the first part gives the required result. ∎

Let A ∈ Mm×n(ℂ) and let B = RREF(A). Then, as an immediate application of Lemma 3.5.3, we get dim(Row(A)) = Row rank(A). We now prove that dim(Row(A)) = dim(Col(A)).

Proof. Let dim(Row(A)) = r. Then, there exist $i_1,\ldots,i_r$ such that $\{A[i_1,:],\ldots,A[i_r,:]\}$ forms a basis of Row(A). Then,

$$B = \begin{bmatrix} A[i_1,:] \\ \vdots \\ A[i_r,:] \end{bmatrix}$$

is an r × n matrix and its rows are a basis of Row(A). Therefore, there exist $\alpha_{ij} \in \mathbb{C}$, 1 ≤ i ≤ m, 1 ≤ j ≤ r, such that $A[t,:] = [\alpha_{t1},\ldots,\alpha_{tr}]B$, for 1 ≤ t ≤ m. So, using matrix multiplication

$$A = \begin{bmatrix} A[1,:] \\ \vdots \\ A[m,:] \end{bmatrix} = \begin{bmatrix} [\alpha_{11},\ldots,\alpha_{1r}]B \\ \vdots \\ [\alpha_{m1},\ldots,\alpha_{mr}]B \end{bmatrix} = CB = \begin{bmatrix} \alpha_{11} & \cdots & \alpha_{1r} \\ \vdots & \ddots & \vdots \\ \alpha_{m1} & \cdots & \alpha_{mr} \end{bmatrix} B,$$

where $C = [\alpha_{ij}]$ is an m × r matrix. Hence every column of A is a linear combination of the r columns of C, so dim(Col(A)) ≤ r = dim(Row(A)). Applying the same argument to $A^T$ gives the reverse inequality.

Remark 3.5.5. The proof also shows that for every $A \in M_{m\times n}(\mathbb{C})$ of rank r there exist matrices $B_{r\times n}$ and $C_{m\times r}$, each of rank r, such that A = CB.
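As a small illustration of Remark 3.5.5, the following Python sketch builds such a factorization for one matrix, following the construction in the proof: B collects rows of A that form a basis of Row(A), and C collects the coefficients that express each row of A in terms of the rows of B. The sample matrix, the helper names `rref`, `matmul` and `rank_factorization`, and the use of exact fractions are assumptions made only for this illustration. The sketch also assumes that A is nonzero.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): reduced row echelon form and its pivot column indices."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        # Look for a nonzero entry in column c at or below row r.
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots

def matmul(X, Y):
    """Plain matrix product of two lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def rank_factorization(A):
    """Return (C, B) with A = C B, where the rows of B are rows of A."""
    At = [list(col) for col in zip(*A)]   # columns of At are the rows of A
    R, pivots = rref(At)                  # pivots index independent rows of A
    r = len(pivots)
    B = [[Fraction(x) for x in A[i]] for i in pivots]          # r x n
    C = [[R[j][t] for j in range(r)] for t in range(len(A))]   # m x r
    return C, B

A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]     # hypothetical example of rank 2
C, B = rank_factorization(A)
print(matmul(C, B) == A)
```

Here the pivot columns of RREF(Aᵀ) pick out the rows of A used in B, and the remaining entries of RREF(Aᵀ) supply the coefficients stored in C.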

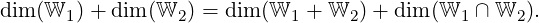

Let W1 and W2 be two subspaces of a vector space V over F. Then, recall that (see Exercise 3.1.24.4d) W1 + W2 = {u + v | u ∈ W1, v ∈ W2} = LS(W1 ∪ W2) is the smallest subspace of V containing both W1 and W2. We now state a result analogous to the counting identity |A| + |B| = |A ∪ B| + |A ∩ B| for finite sets A and B, familiar from Venn diagrams (for a proof, see Appendix 9.4.1).

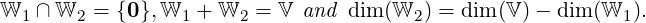

Theorem 3.5.6. Let V be a finite dimensional vector space over F. If W1 and W2 are two subspaces of V then

$$\dim(W_1) + \dim(W_2) = \dim(W_1 + W_2) + \dim(W_1 \cap W_2). \tag{3.5.2}$$

For better understanding, we give an example for finite subsets of ℝn. The example uses Theorem 3.3.9 to obtain bases of LS(S), for different choices of S. The readers are advised to see Example 3.3.9 before proceeding further.

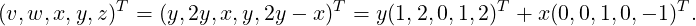

Example 3.5.7. Let V and W be two spaces with V = {(v,w,x,y,z)T ∈ ℝ5|v + x + z = 3y}

and W = {(v,w,x,y,z)T ∈ ℝ5|w - x = z,v = y}. Find bases of V and W containing a basis of

V ∩ W.

Solution: One can first find a basis of V ∩ W and then heuristically add a few vectors to get

bases for V and W, separately.


Alternatively, first find bases of V, W and V ∩ W, say $\mathcal{B}_V$, $\mathcal{B}_W$ and $\mathcal{B}$. Now, consider $S = \mathcal{B} \cup \mathcal{B}_V$. This set is linearly dependent. So, obtain a linearly independent subset of S that contains all the elements of $\mathcal{B}$. Similarly, do for $T = \mathcal{B} \cup \mathcal{B}_W$.

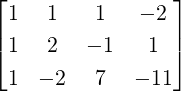

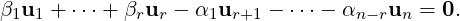

So, we first find a basis of V ∩ W. Note that (v,w,x,y,z)T ∈ V ∩ W if v,w,x,y and z satisfy v + x - 3y + z = 0,w - x - z = 0 and v = y. The solution of the system is given by

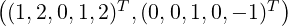

Thus, $\mathcal{B} = \{(1,2,0,1,2)^T, (0,0,1,0,-1)^T\}$ is a basis of V ∩ W. Similarly, a basis of V is given by $\mathcal{B}_V = \{(-1,0,1,0,0)^T, (0,1,0,0,0)^T, (3,0,0,1,0)^T, (-1,0,0,0,1)^T\}$ and that of W is given by $\mathcal{B}_W = \{(1,0,0,1,0)^T, (0,1,1,0,0)^T, (0,1,0,0,1)^T\}$. To find the required basis, form a matrix whose rows are the vectors in $\mathcal{B}$, $\mathcal{B}_V$ and $\mathcal{B}_W$ (see Equation (3.5.3)) and apply row operations other than $E_{ij}$. Then, after a few row operations, we get

| (3.5.3) |

Thus, a required basis of V is {(1,2,0,1,2)T ,(0,0,1,0,-1)T ,(0,1,0,0,0)T ,(0,0,0,1,3)T }. Similarly, a required basis of W is {(1,2,0,1,2)T ,(0,0,1,0,-1)T ,(0,1,0,0,1)T }.
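The bases obtained in Example 3.5.7 can be checked mechanically. The Python sketch below tests that each listed vector satisfies the defining equations of V or W, computes the relevant dimensions as ranks, and compares them with the dimension formula for W1 + W2 and W1 ∩ W2. The helper `rank` and the variable names are assumptions introduced for this check. Exact fractions are used to avoid rounding.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix given as a list of rows (Gaussian elimination)."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0])):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        for i in range(r + 1, len(m)):
            f = m[i][c] / m[r][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r

# Defining conditions of V and W on a vector t = (v, w, x, y, z).
in_V = lambda t: t[0] + t[2] + t[4] == 3 * t[3]
in_W = lambda t: t[1] - t[2] == t[4] and t[0] == t[3]

common = [(1, 2, 0, 1, 2), (0, 0, 1, 0, -1)]            # basis of V ∩ W
basis_V = common + [(0, 1, 0, 0, 0), (0, 0, 0, 1, 3)]   # required basis of V
basis_W = common + [(0, 1, 0, 0, 1)]                    # required basis of W

dim_V, dim_W = rank(basis_V), rank(basis_W)
dim_sum = rank(basis_V + basis_W)                       # dim(V + W)
# dim(V ∩ W) = 5 - rank of the three equations defining V ∩ W.
dim_cap = 5 - rank([(1, 0, 1, -3, 1), (0, 1, -1, 0, -1), (1, 0, 0, -1, 0)])

print(dim_V, dim_W, dim_sum, dim_cap)
print(dim_V + dim_W == dim_sum + dim_cap)
```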


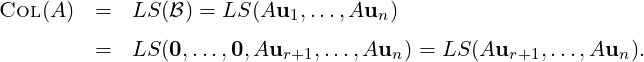

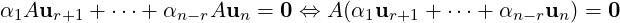

We now prove the rank-nullity theorem and give some of its consequences.

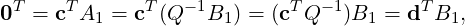

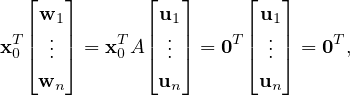

Proof. Let dim(Null(A)) = r ≤ n and let $\mathcal{B} = \{u_1,\ldots,u_r\}$ be a basis of Null(A). Since $\mathcal{B}$ is a linearly independent set in ℝn, extend it to get $\mathcal{C} = \{u_1,\ldots,u_n\}$ as a basis of ℝn. Then, $\mathcal{D} = \{Au_{r+1},\ldots,Au_n\}$ spans Col(A). We further need to show that $\mathcal{D}$ is linearly independent. So, consider the linear system

$$\alpha_1 Au_{r+1} + \cdots + \alpha_{n-r} Au_n = 0 \tag{3.5.5}$$

in the variables $\alpha_1,\ldots,\alpha_{n-r}$. Thus, $\alpha_1 u_{r+1} + \cdots + \alpha_{n-r} u_n \in \mathrm{Null}(A) = LS(\mathcal{B})$. Therefore, there exist scalars $\beta_i$, 1 ≤ i ≤ r, such that $\sum_{i=1}^{n-r} \alpha_i u_{r+i} = \sum_{j=1}^{r} \beta_j u_j$. Or equivalently,

$$\beta_1 u_1 + \cdots + \beta_r u_r - \alpha_1 u_{r+1} - \cdots - \alpha_{n-r} u_n = 0. \tag{3.5.6}$$

As $\mathcal{C}$ is a linearly independent set, the only solution of Equation (3.5.6) is $\alpha_i = 0$, for 1 ≤ i ≤ n - r, and $\beta_j = 0$, for 1 ≤ j ≤ r. Thus, $\mathcal{D}$ is linearly independent and hence a basis of Col(A). Therefore, dim(Col(A)) = n - r = n - dim(Null(A)).
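The count in the proof can be observed on a concrete matrix. The following Python sketch reads a basis of Null(A) off the reduced row echelon form and checks that the number of pivots plus the number of null space basis vectors equals the number of columns. The matrix and the helper names `rref` and `null_space` are hypothetical choices made only for illustration.

```python
from fractions import Fraction

def rref(rows):
    """Return (R, pivots): reduced row echelon form and its pivot column indices."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        piv = m[r][c]
        m[r] = [x / piv for x in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return m, pivots

def null_space(A):
    """Return (basis of Null(A), rank of A), one basis vector per free column."""
    R, pivots = rref(A)
    n = len(A[0])
    basis = []
    for f in (c for c in range(n) if c not in pivots):
        x = [Fraction(0)] * n
        x[f] = Fraction(1)                 # set one free variable to 1
        for row, p in zip(R, pivots):      # solve for the pivot variables
            x[p] = -row[f]
        basis.append(x)
    return basis, len(pivots)

A = [[1, 2, 0, 1], [2, 4, 1, 3], [3, 6, 1, 4]]   # hypothetical 3 x 4 matrix
basis, rank_A = null_space(A)
print(rank_A + len(basis) == len(A[0]))
```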

Theorem 3.5.9 is part of what is known as the fundamental theorem of linear algebra (see Theorem 5.2.16). The following are some of the consequences of the rank-nullity theorem. The proofs are left as an exercise for the reader.


Let V be a vector space over ℂ with dim(V) = n, for some positive integer n. Also, let W be a subspace of V with dim(W) = k. Then, a basis of W may not look like a standard basis. Our problem may force us to look for some other basis. In such a case, it is always helpful to fix the vectors in a particular order and then concentrate only on the coefficients of the vectors, as was done for the system of linear equations where we didn’t worry about the variables. It may also happen that k is very small as compared to n, in which case it is better to work with k vectors in place of n vectors.

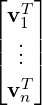

Definition 3.6.1. [Ordered Basis, Basis Matrix] Let W be a vector space over F with a basis $\mathcal{B} = \{u_1,\ldots,u_m\}$. Then, an ordered basis for W is a basis $\mathcal{B}$ together with a one-to-one correspondence between $\mathcal{B}$ and {1,2,…,m}. Since there is an order among the elements of $\mathcal{B}$, we write $\mathcal{B} = (u_1,\ldots,u_m)$. The vector $B = [u_1,\ldots,u_m]$ is an element of $W^m$ and is generally called the basis matrix.

Consider the ordered basis $\mathcal{B} = (e_1,e_2,e_3)$ of ℝ³. Then, $B = \begin{bmatrix} e_1 & e_2 & e_3 \end{bmatrix}$.

Consider the ordered basis $\mathcal{B} = (1,x,x^2,x^3)$ of ℝ[x;3]. Then, $B = \begin{bmatrix} 1 & x & x^2 & x^3 \end{bmatrix}$.


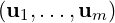

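Consider the ordered basis $\mathcal{B} = (\mathbf{e}_{12} - \mathbf{e}_{21},\ \mathbf{e}_{13} - \mathbf{e}_{31},\ \mathbf{e}_{23} - \mathbf{e}_{32})$ of the set of 3 × 3 skew-symmetric matrices. Then, $B = \begin{bmatrix} \mathbf{e}_{12} - \mathbf{e}_{21} & \mathbf{e}_{13} - \mathbf{e}_{31} & \mathbf{e}_{23} - \mathbf{e}_{32} \end{bmatrix}$.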

Thus, $\mathcal{B} = (u_1,u_2,\ldots,u_m)$ is different from $\mathcal{C} = (u_2,u_3,\ldots,u_m,u_1)$ and both of them are different from $\mathcal{D} = (u_m,u_{m-1},\ldots,u_2,u_1)$ even though they have the same set of vectors as elements. We now define the notion of coordinates of a vector with respect to an ordered basis.

Definition 3.6.3. [Coordinate Vector] Let $B = [v_1,\ldots,v_m]$ be the basis matrix corresponding to an ordered basis $\mathcal{B}$ of W. Since $\mathcal{B}$ is a basis of W, for each v ∈ W, there exist $\beta_i$, 1 ≤ i ≤ m, such that $v = \sum_{i=1}^{m} \beta_i v_i = B \begin{bmatrix} \beta_1 \\ \vdots \\ \beta_m \end{bmatrix}$. The vector $\begin{bmatrix} \beta_1 \\ \vdots \\ \beta_m \end{bmatrix}$, denoted $[v]_{\mathcal{B}}$, is called the coordinate vector of v with respect to $\mathcal{B}$. Thus,

$$v = B[v]_{\mathcal{B}} = [v_1,\ldots,v_m][v]_{\mathcal{B}}, \text{ or equivalently, } v = [v]_{\mathcal{B}}^T \begin{bmatrix} v_1 \\ \vdots \\ v_m \end{bmatrix}. \tag{3.6.1}$$

The last expression is generally viewed as a symbolic expression.

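If $\mathcal{B} = (1,x,x^2,x^3)$ is an ordered basis of ℝ[x;3] then, for $f(x) = 1 + x + x^3$,

$$f(x) = B[f(x)]_{\mathcal{B}} = \begin{bmatrix} 1 & x & x^2 & x^3 \end{bmatrix} \begin{bmatrix} 1 \\ 1 \\ 0 \\ 1 \end{bmatrix} = \begin{bmatrix} 1 & 1 & 0 & 1 \end{bmatrix} \begin{bmatrix} 1 \\ x \\ x^2 \\ x^3 \end{bmatrix}.$$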

Let $u_0(x) = 1$, $u_1(x) = x$ and $u_2(x) = \tfrac{3}{2}x^2 - \tfrac{1}{2}$, for all x ∈ [-1,1]. Then, $\mathcal{B} = (u_0(x),u_1(x),u_2(x))$ forms an ordered basis of ℝ[x;2]. Note that these polynomials satisfy the following conditions:

Verify that

$$a_0 + a_1x + a_2x^2 = B\,[a_0 + a_1x + a_2x^2]_{\mathcal{B}} = \begin{bmatrix} u_0(x) & u_1(x) & u_2(x) \end{bmatrix} \begin{bmatrix} a_0 + \frac{a_2}{3} \\ a_1 \\ \frac{2a_2}{3} \end{bmatrix} = \begin{bmatrix} a_0 + \frac{a_2}{3} & a_1 & \frac{2a_2}{3} \end{bmatrix} \begin{bmatrix} u_0(x) \\ u_1(x) \\ u_2(x) \end{bmatrix}.$$

then, A = X + Y, where X =  and Y =  .

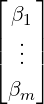

=  is an ordered basis of M3(ℝ) then

$$[A]_{\mathcal{B}}^T = \begin{bmatrix} 1 & 2 & 3 & 2 & 1 & 3 & 3 & 1 & 4 \end{bmatrix}.$$

=  is an ordered basis of U then, $[X]^T = \begin{bmatrix} 1 & 2 & 3 & 1 & 2 & 4 \end{bmatrix}$.

=  is an ordered basis of W then, $[Y]^T = \begin{bmatrix} 0 & 0 & -1 \end{bmatrix}$.

Thus, in general, any matrix $A \in M_{m,n}(\mathbb{R})$ can be mapped to $\mathbb{R}^{mn}$ with respect to the ordered basis  =  and vice versa.


=  can be taken as an ordered basis of V. In this case, $[(3,6,0,3,1)] = \begin{bmatrix} 3 \\ 5 \end{bmatrix}$.
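The coordinate vector in the example with $u_0, u_1, u_2$ above can be checked by solving a small linear system. In the Python sketch below, each polynomial of degree at most 2 is stored by its coefficients with respect to (1, x, x²), the columns of `B` hold the coefficients of $u_0, u_1, u_2$, and `solve` finds the coordinates c with Bc = p. The function name `solve`, the sample values of $a_0, a_1, a_2$ and the use of exact fractions are assumptions for this illustration. The solver assumes that B is invertible.

```python
from fractions import Fraction as F

def solve(B, v):
    """Solve B c = v for a square invertible B by Gauss-Jordan elimination."""
    n = len(B)
    M = [[F(x) for x in row] + [F(b)] for row, b in zip(B, v)]
    for c in range(n):
        p = next(i for i in range(c, n) if M[i][c] != 0)
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [x / piv for x in M[c]]
        for i in range(n):
            if i != c and M[i][c] != 0:
                f = M[i][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[c])]
    return [row[n] for row in M]

# Columns: coefficients of u0 = 1, u1 = x, u2 = (3/2)x^2 - 1/2 in (1, x, x^2).
B = [[1, 0, F(-1, 2)],
     [0, 1, 0],
     [0, 0, F(3, 2)]]

a0, a1, a2 = 5, -2, 3                  # hypothetical polynomial 5 - 2x + 3x^2
coords = solve(B, [a0, a1, a2])
print(coords == [a0 + F(a2, 3), a1, F(2 * a2, 3)])
```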

Remark 3.6.5. [Basis representation of v]

1. Let $\mathcal{B}$ be an ordered basis of a vector space V over F of dimension n. Then,
   (a) $[\alpha v + w]_{\mathcal{B}} = \alpha[v]_{\mathcal{B}} + [w]_{\mathcal{B}}$, for all α ∈ F and v, w ∈ V.
   (b) a set $\{w_1,\ldots,w_m\} \subseteq V$ is linearly independent if and only if $\{[w_1]_{\mathcal{B}},\ldots,[w_m]_{\mathcal{B}}\}$ is linearly independent in $F^n$.
2. Suppose $V = F^n$. Then, the basis matrix B is an n × n invertible matrix and

$$B[v]_{\mathcal{B}} = v = (BB^{-1})v = B(B^{-1}v), \text{ for every } v \in V. \tag{3.6.2}$$

   As B is invertible, $[v]_{\mathcal{B}} = B^{-1}v$, for every v ∈ V.

Definition 3.6.6. [Change of Basis Matrix] Let V be a vector space over F with dim(V) = n. Let $A = [v_1,\ldots,v_n]$ and $B = [u_1,\ldots,u_n]$ be basis matrices corresponding to the ordered bases $\mathcal{A}$ and $\mathcal{B}$, respectively, of V. Thus, using Equation (3.6.1), we have

$$[v_1,\ldots,v_n] = [B[v_1]_{\mathcal{B}},\ldots,B[v_n]_{\mathcal{B}}] = B[[v_1]_{\mathcal{B}},\ldots,[v_n]_{\mathcal{B}}] = B[\mathcal{A}]_{\mathcal{B}},$$

where $[\mathcal{A}]_{\mathcal{B}} = [[v_1]_{\mathcal{B}},\ldots,[v_n]_{\mathcal{B}}]$. Or equivalently, verify the symbolic equality

$$\begin{bmatrix} v_1 \\ \vdots \\ v_n \end{bmatrix} = [\mathcal{A}]_{\mathcal{B}}^T \begin{bmatrix} u_1 \\ \vdots \\ u_n \end{bmatrix}. \tag{3.6.3}$$

The matrix $[\mathcal{A}]_{\mathcal{B}}$ is called the matrix of $\mathcal{A}$ with respect to the ordered basis $\mathcal{B}$ or the change of basis matrix from $\mathcal{A}$ to $\mathcal{B}$.

We now summarize the above discussion.

Theorem 3.6.7. Let V be a vector space over F with dim(V) = n. Further, let $\mathcal{A} = (v_1,\ldots,v_n)$ and $\mathcal{B} = (u_1,\ldots,u_n)$ be two ordered bases of V.

1. The matrix $[\mathcal{A}]_{\mathcal{B}}$ is invertible.
2. The matrix $[\mathcal{B}]_{\mathcal{A}}$ is invertible.
3. $[x]_{\mathcal{B}} = [\mathcal{A}]_{\mathcal{B}}[x]_{\mathcal{A}}$, for all x ∈ V. Thus, again note that the matrix $[\mathcal{A}]_{\mathcal{B}}$ takes the coordinate vector of x with respect to $\mathcal{A}$ to the coordinate vector of x with respect to $\mathcal{B}$. Hence, $[\mathcal{A}]_{\mathcal{B}}$ was called the change of basis matrix from $\mathcal{A}$ to $\mathcal{B}$.
4. $[x]_{\mathcal{A}} = [\mathcal{B}]_{\mathcal{A}}[x]_{\mathcal{B}}$, for all x ∈ V.
5. $([\mathcal{A}]_{\mathcal{B}})^{-1} = [\mathcal{B}]_{\mathcal{A}}$.

Proof. Part 1: Note that using Equation (3.6.3), we have $\begin{bmatrix} v_1 \\ \vdots \\ v_n \end{bmatrix} = [\mathcal{A}]_{\mathcal{B}}^T \begin{bmatrix} u_1 \\ \vdots \\ u_n \end{bmatrix}$ and hence by Exercise 3.3.13.8, the matrix $[\mathcal{A}]_{\mathcal{B}}^T$ or equivalently $[\mathcal{A}]_{\mathcal{B}}$ is invertible, which proves Part 1. A similar argument gives Part 2.

Part 3: Using Equations (3.6.1) and (3.6.3), for any x ∈ V, we have

$$[x]_{\mathcal{B}}^T \begin{bmatrix} u_1 \\ \vdots \\ u_n \end{bmatrix} = x = [x]_{\mathcal{A}}^T \begin{bmatrix} v_1 \\ \vdots \\ v_n \end{bmatrix} = [x]_{\mathcal{A}}^T [\mathcal{A}]_{\mathcal{B}}^T \begin{bmatrix} u_1 \\ \vdots \\ u_n \end{bmatrix}.$$

Since $\mathcal{B}$ is a basis, comparing coefficients gives $[x]_{\mathcal{B}}^T = [x]_{\mathcal{A}}^T [\mathcal{A}]_{\mathcal{B}}^T$. Or equivalently, $[x]_{\mathcal{B}} = [\mathcal{A}]_{\mathcal{B}}[x]_{\mathcal{A}}$. This completes the proof of Part 3. We leave the proof of other parts to the reader. ∎

Let $\mathcal{B} = (e_1,\ldots,e_n)$ be the standard ordered basis. Then

$$[\mathcal{A}]_{\mathcal{B}} = [[v_1]_{\mathcal{B}},\ldots,[v_n]_{\mathcal{B}}] = [v_1,\ldots,v_n] = A.$$

Let $\mathcal{B} = \left( \begin{bmatrix} 1 \\ 1 \end{bmatrix}, \begin{bmatrix} 1 \\ 2 \end{bmatrix} \right)$ be an ordered basis of ℝ². Then, by Remark 3.6.5.2, $B = \begin{bmatrix} 1 & 1 \\ 1 & 2 \end{bmatrix}$ is an invertible matrix. Thus, verify that $\left[ \begin{bmatrix} \pi \\ e \end{bmatrix} \right]_{\mathcal{B}} = \begin{bmatrix} 1 & 1 \\ 1 & 2 \end{bmatrix}^{-1} \begin{bmatrix} \pi \\ e \end{bmatrix}$.

Suppose that $\mathcal{A} = \left( (1,0,0)^T, (1,1,0)^T, (1,1,1)^T \right)$ and $\mathcal{B} = \left( (1,1,1)^T, (1,-1,1)^T, (1,1,0)^T \right)$ are two ordered bases of ℝ³. Then, we verify the statements in the previous result.
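The statements of Theorem 3.6.7 can be checked numerically for the two ordered bases of ℝ³ given above, taking the first triple as the basis with basis matrix A and the second as the basis with basis matrix B. The Python sketch below computes the change of basis matrix from A = BP, computes Q from B = AQ, and tests Parts 3 and 5 on a sample vector. The helper names `solve_matrix` and `matmul`, the names `P`, `Q` and the vector `x` are assumptions made for this check.

```python
from fractions import Fraction

def solve_matrix(B, A):
    """Return X with B X = A for a square invertible B (exact Gauss-Jordan)."""
    n = len(B)
    M = [[Fraction(x) for x in rb] + [Fraction(x) for x in ra]
         for rb, ra in zip(B, A)]
    for c in range(n):
        p = next(i for i in range(c, n) if M[i][c] != 0)
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [x / piv for x in M[c]]
        for i in range(n):
            if i != c and M[i][c] != 0:
                f = M[i][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[c])]
    return [row[n:] for row in M]

def matmul(X, Y):
    """Plain matrix product of two lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

# Basis matrices: the columns are the basis vectors.
A = [[1, 1, 1], [0, 1, 1], [0, 0, 1]]      # columns (1,0,0), (1,1,0), (1,1,1)
B = [[1, 1, 1], [1, -1, 1], [1, 1, 0]]     # columns (1,1,1), (1,-1,1), (1,1,0)

P = solve_matrix(B, A)                     # change of basis matrix, A = B P
Q = solve_matrix(A, B)                     # the other direction, B = A Q
I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

x = [[2], [3], [5]]                        # hypothetical vector of R^3, as a column
x_A, x_B = solve_matrix(A, x), solve_matrix(B, x)

print(matmul(P, Q) == I3, matmul(P, x_A) == x_B)
```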

Remark 3.6.9. Let V be a vector space over F with $\mathcal{A} = (v_1,\ldots,v_n)$ as an ordered basis. Then, by Theorem 3.6.7, $[v]_{\mathcal{A}}$ is an element of $F^n$, for each v ∈ V. Therefore,


Exercise 3.6.10. Let $\mathcal{A} = \left( (1,2,0)^T, (1,3,2)^T, (0,1,3)^T \right)$ and $\mathcal{B} = \left( (1,2,1)^T, (0,1,2)^T, (1,4,6)^T \right)$ be two ordered bases of ℝ³.

1. Find the change of basis matrix P from $\mathcal{A}$ to $\mathcal{B}$.
2. Find the change of basis matrix Q from $\mathcal{B}$ to $\mathcal{A}$.
3. What do you notice? Is it true that PQ = I = QP? Give reasons for your answer.

In this chapter, we defined vector spaces over F. The set F was either ℝ or ℂ. To define a vector space, we start with a non-empty set V of vectors and F the set of scalars. We also needed to do the following:

If all conditions in Definition 3.1.1 are satisfied then V is a vector space over F. If W is a non-empty subset of a vector space V over F then, for W to be a subspace, we only need to check that W is closed under the vector addition and scalar multiplication inherited from V.


We then learnt linear combination of vectors and the linear span of vectors. It was also shown that the linear span of a subset S of a vector space V is the smallest subspace of V containing S. Also, to check whether a given vector v is a linear combination of $u_1,\ldots,u_n$, we needed to solve the linear system $c_1u_1 + \cdots + c_nu_n = v$ in the variables $c_1,\ldots,c_n$. Or equivalently, the system Ax = b, where in some sense $A[:,i] = u_i$, 1 ≤ i ≤ n, $x^T = [c_1,\ldots,c_n]$ and b = v. It was also shown that the geometrical representation of the linear span of $S = \{u_1,\ldots,u_n\}$ is equivalent to finding conditions in the entries of b such that Ax = b was always consistent.

Then, we learnt linear independence and dependence. A set S = {u1,…,un} is a linearly independent set in the vector space V over F if the homogeneous system Ax = 0 has only the trivial solution in F. Otherwise, S is linearly dependent. Here, as before, the columns of A correspond to the vectors ui.
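Both checks recalled above reduce to rank computations, which the following Python sketch illustrates. A vector v lies in the linear span of given vectors exactly when appending it does not raise the rank, and the vectors are linearly independent exactly when the rank equals their number. The vectors are stored as rows here, which does not change the rank. The function names `rank`, `in_span` and `is_independent` and the sample vectors are assumptions for illustration only.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix given as a list of rows (Gaussian elimination)."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0])):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        for i in range(r + 1, len(m)):
            f = m[i][c] / m[r][c]
            m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r

def in_span(vectors, v):
    """True when Ax = v is consistent, where the columns of A are the given vectors."""
    return rank(list(vectors) + [v]) == rank(list(vectors))

def is_independent(vectors):
    """True when Ax = 0 has only the trivial solution."""
    return rank(list(vectors)) == len(vectors)

u1, u2 = (1, 0, 1), (0, 1, 1)              # hypothetical vectors in R^3
print(in_span([u1, u2], (2, 3, 5)))        # (2,3,5) = 2*u1 + 3*u2
print(in_span([u1, u2], (1, 1, 0)))
print(is_independent([u1, u2]))
print(is_independent([u1, u2, (2, 3, 5)]))
```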

We then talked about the maximal linearly independent set (coming from the homogeneous system) and the minimal spanning set (coming from the non-homogeneous system) and culminating in the notion of the basis of a finite dimensional vector space V over F. The following important results were proved.

This number was defined as the dimension of V, denoted dim(V).

Now let A ∈ Mn(ℝ). Then, combining a few results from the previous chapter, we have the following equivalent conditions.
